55 Snowflake interview questions (+ sample answers) to assess candidates’ data skills

If your organization’s teams are using Snowflake for their large-scale data analytics projects, you need to assess candidates’ proficiency with this tool quickly and efficiently.

What’s the best way to do this, though?

With the help of skills tests and interviews. Use our Snowflake test to make sure candidates have sufficient experience with data architecture and SQL. Combine it with other tests for an in-depth talent assessment and then invite those who perform best to an interview.

During interviews, ask candidates targeted Snowflake interview questions to further evaluate their skills and knowledge. Whether you’re hiring a database administrator, data engineer, or data analyst, this method will enable you to identify the best person for the role.

To help you prepare for the interviews, we’ve prepared a list of 55 Snowflake interview questions you can ask candidates, along with sample answers to 25 of them.

Table of contents

- Top 25 Snowflake interview questions to evaluate applicants’ proficiency

- Extra 30 Snowflake interview questions you can ask candidates

- Use skills tests and structured interviews to hire the best data experts

In this section, you’ll find the best 25 interview questions to assess candidates’ Snowflake skills. We’ve also included sample answers, so that you know what to look for, even if you have no experience with Snowflake yourself.

1. What is Snowflake? How is it different from other data warehousing solutions?

Snowflake is a cloud-based data warehousing service for data storage, processing, and analysis.

Unlike traditional data warehouses, which often require significant hardware management and tuning, Snowflake separates compute and storage. This means you can scale computing power and storage independently, based on your needs, without costly hardware upgrades.

Snowflake runs on the major cloud providers (AWS, Microsoft Azure, and Google Cloud), so businesses can choose the provider and region that suit them and replicate data across clouds and regions.

2. What are virtual warehouses in Snowflake? Why are they important?

Virtual warehouses in Snowflake are separate compute clusters that perform data processing tasks. Each virtual warehouse operates independently, which means they do not share compute resources with each other.

This architecture is crucial because it allows multiple users or teams to run queries simultaneously without affecting each other's performance. The ability to scale warehouses on demand enables users to manage and allocate resources based on their needs and reduce costs.

3. Explain the data sharing capabilities of Snowflake.

Snowflake's data sharing capabilities enable the secure and governed sharing of data in real time across different Snowflake accounts, without copying or transferring the data itself.

This is useful for organizations that need to collaborate with clients or external partners while maintaining strict governance and security protocols. Users can share entire databases and schemas, or specific tables and views.
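To make this concrete, here is a minimal sketch of the statements a provider account might run to share one secure view with a consumer account. It is written as a small Python helper that only assembles the SQL text, so it can be checked without a Snowflake account; all database, schema, view, share, and account names are hypothetical placeholders.

```python
def build_share_statements(share: str, database: str, schema: str,
                           secure_view: str, consumer_account: str) -> list[str]:
    """Return the statements for a read-only share, in execution order."""
    return [
        # 1. Create an empty share object.
        f"CREATE SHARE {share};",
        # 2. Let the share reach the database and schema that hold the data.
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share};",
        # 3. Expose only a secure view, not the underlying tables.
        f"GRANT SELECT ON VIEW {database}.{schema}.{secure_view} TO SHARE {share};",
        # 4. Add the consumer account; no data is copied or moved.
        f"ALTER SHARE {share} ADD ACCOUNTS = {consumer_account};",
    ]
```

Running these statements requires a role allowed to create shares (ACCOUNTADMIN by default). The consumer then creates a read-only database from the share in their own account and queries it with their own compute.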

4. What types of data can Snowflake store and process?

Snowflake is designed to handle a wide range of data types, from structured data to semi-structured data such as JSON, XML, and Avro.

Snowflake eliminates the need for a separate NoSQL database to handle semi-structured data, because it can natively ingest, store, and directly query semi-structured data using standard SQL. This simplifies the data architecture and enables analysts to work with different data types within the same platform.

5. What is Time Travel in Snowflake? How would you use it?

Time Travel in Snowflake allows users to access historical data (including data that has been changed or deleted) at any point within a defined retention period. The default is 1 day; on Enterprise Edition and higher, it can be extended to up to 90 days.

Candidates should point out that this is useful for recovering data that was accidentally modified or deleted (for example, by cloning a table as it existed before the mistake) and for auditing historical data. This limits the impact of human error and helps protect the integrity of your data.
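As a sketch of what a candidate might describe, the hypothetical helpers below build Time Travel statements using Snowflake’s AT(OFFSET => ...) clause, which takes a negative number of seconds relative to now, and refuse offsets beyond a retention period you pass in. The helper and table names are assumptions; in Snowflake, the real limit comes from the table’s DATA_RETENTION_TIME_IN_DAYS setting.

```python
SECONDS_PER_DAY = 86_400


def _at_clause(seconds_ago: int, retention_days: int) -> str:
    """Validate the offset and return an AT(OFFSET => ...) clause."""
    if seconds_ago <= 0:
        raise ValueError("seconds_ago must be positive")
    if seconds_ago > retention_days * SECONDS_PER_DAY:
        # Snowflake rejects points outside the retention period; fail early here.
        raise ValueError(
            f"{seconds_ago}s is outside the {retention_days}-day retention period")
    # A negative offset means "this many seconds before now".
    return f"AT(OFFSET => -{seconds_ago})"


def time_travel_select(table: str, seconds_ago: int, retention_days: int = 1) -> str:
    """Query a table as it was `seconds_ago` seconds ago."""
    return f"SELECT * FROM {table} {_at_clause(seconds_ago, retention_days)};"


def restore_clone(table: str, seconds_ago: int, retention_days: int = 1) -> str:
    """Recreate the historical state as a new table using a zero-copy clone."""
    clause = _at_clause(seconds_ago, retention_days)
    return f"CREATE TABLE {table}_restored CLONE {table} {clause};"
```

For example, `restore_clone("orders", 3600)` produces a statement that recreates `orders` as it was one hour ago. A table that was dropped entirely can be brought back within the retention period with `UNDROP TABLE`.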

6. Explain how Snowflake processes queries using micro-partitions.

Snowflake automatically divides table data into micro-partitions, each holding between 50 MB and 500 MB of uncompressed data stored in columnar form. For each micro-partition, Snowflake keeps metadata such as the range (min/max) of values in each column and the number of distinct values. At query time, it uses this metadata to skip (prune) micro-partitions that cannot contain matching rows. This speeds up retrieval, reduces resource usage, and improves the performance of queries.
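The pruning idea can be illustrated with a simplified Python model. It assumes a single integer column and keeps only min/max metadata per micro-partition (real micro-partitions track metadata for every column); the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroPartition:
    """Per-partition metadata for one column (simplified)."""
    partition_id: int
    min_value: int
    max_value: int


def prune(partitions, low, high):
    """Keep only partitions whose [min, max] range can overlap [low, high].

    Partitions entirely outside the predicate are skipped without reading
    any of their data.
    """
    return [p for p in partitions
            if p.max_value >= low and p.min_value <= high]


# Order dates stored as YYYYMMDD integers in four micro-partitions.
partitions = [
    MicroPartition(1, 20240101, 20240131),
    MicroPartition(2, 20240201, 20240229),
    MicroPartition(3, 20240301, 20240331),
    MicroPartition(4, 20240115, 20240310),  # poorly clustered: wide range
]

# WHERE order_date BETWEEN 20240205 AND 20240210
to_scan = prune(partitions, 20240205, 20240210)
```

Only partitions 2 and 4 are scanned. Partition 4 spans several months, which shows why poorly clustered data limits how much Snowflake can prune.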

7. Describe a data modeling project you executed in Snowflake.

Here, expect candidates to discuss a recent Snowflake project in detail, talk about the schemas they used, challenges they encountered, and results they obtained.

For example, skilled candidates might explain how they used Snowflake to support a major data-analytics project, using star and snowflake schemas to organize data. They could mention how they optimized models for query performance and flexibility, using Snowflake’s capabilities to handle structured and semi-structured data.

8. How do you decide when to use a star schema versus a snowflake schema in your data warehouse design?

The choice between a star schema and a snowflake schema depends on the specific requirements of the project:

A star schema is simpler and offers better query performance because it minimizes the number of joins needed between tables. It’s effective for simpler or smaller datasets where query speed is crucial.

A snowflake schema involves more normalization, which reduces data redundancy and can lead to storage efficiencies. This schema is ideal when the dataset is complex and requires more detailed analysis.

The decision depends on balancing the need for efficient data storage versus fast query performance.

9. Explain how to use Resource Monitors in Snowflake.

Resource Monitors track and control the consumption of compute resources (measured in credits), ensuring that usage stays within budgeted limits. They’re particularly useful in multi-team environments, and users can set them up for specific warehouses or for the entire account.

Candidates should explain that to use them, they would:

Define a resource monitor

Set a credit quota and how often it resets (for example, monthly)

Specify actions for when resource usage reaches or exceeds those limits

Actions can include sending notifications, suspending virtual warehouses once running queries finish, or suspending them immediately and cancelling running queries.
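These steps map onto two SQL statements. The sketch below assembles them in Python so the structure is easy to see and test; the monitor name, warehouse name, quota, and thresholds are hypothetical. In the generated SQL, NOTIFY only sends alerts, SUSPEND lets running queries finish before suspending, and SUSPEND_IMMEDIATE cancels running queries.

```python
def resource_monitor_ddl(name: str, credit_quota: int, warehouse: str,
                         notify_pct: int = 75, suspend_pct: int = 100,
                         hard_stop_pct: int = 110) -> list[str]:
    """Return statements that define a monthly monitor and attach it to a warehouse."""
    if not notify_pct < suspend_pct < hard_stop_pct:
        raise ValueError("thresholds must increase: notify < suspend < hard stop")
    create = (
        # Define the monitor with a credit quota that resets monthly.
        f"CREATE RESOURCE MONITOR {name} WITH CREDIT_QUOTA = {credit_quota} "
        "FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
        # Actions at each threshold, as a percentage of the quota.
        f"TRIGGERS ON {notify_pct} PERCENT DO NOTIFY "
        f"ON {suspend_pct} PERCENT DO SUSPEND "
        f"ON {hard_stop_pct} PERCENT DO SUSPEND_IMMEDIATE;"
    )
    # Scope the monitor to one warehouse (ALTER ACCOUNT would make it account-wide).
    attach = f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {name};"
    return [create, attach]
```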

10. What are some methods to improve data loading performance in Snowflake?

There are several ways to improve data loading performance in Snowflake:

File sizing: Using larger files – within a 100 MB-250 MB range – reduces the overhead of handling many small files

File formats: Loading compressed files (for example, gzip-compressed CSV, or Parquet and ORC, which are compressed by design) reduces the volume of data transferred and can speed up loading

Concurrency: Running multiple COPY commands in parallel can maximize throughput

Remove unused columns: Pre-processing files to remove unnecessary columns before loading can reduce the volume of transferred and processed data
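File sizing comes down to simple arithmetic. The hypothetical helper below picks how many files to split a compressed dataset into so that each lands inside the 100 MB to 250 MB range; the 150 MB target is an assumption, not a Snowflake setting.

```python
import math

MB = 1024 * 1024


def plan_split(total_compressed_bytes: int, target_mb: float = 150.0,
               max_mb: float = 250.0) -> tuple[int, float]:
    """Return (file_count, approx_size_mb) for splitting a compressed dataset.

    Loads no larger than `max_mb` stay as one file; bigger loads are split
    into equal parts of at most `target_mb` each.
    """
    total_mb = total_compressed_bytes / MB
    if total_mb <= max_mb:
        return 1, total_mb
    count = math.ceil(total_mb / target_mb)
    return count, total_mb / count
```

For a 10 GB compressed export, this suggests 69 files of roughly 148 MB each, which also gives a single COPY command many files to load in parallel.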

11. How would you scale warehouses dynamically based on load?

Candidates should mention Snowflake’s auto-scale mode for multi-cluster warehouses (available in Enterprise Edition and higher), which automatically adjusts the number of running clusters, between a minimum and a maximum that you set, based on the current workload.

This feature allows Snowflake to start additional clusters when demand increases (for example, when queries begin to queue during heavy query loads) and to shut them down when demand decreases.
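A simplified Python model of this behavior is shown below. It assumes each cluster handles up to 8 concurrent queries (loosely modeled on the MAX_CONCURRENCY_LEVEL warehouse parameter, whose default is 8) and ignores the timing heuristics of Snowflake’s Standard and Economy scaling policies; the function name and load figures are hypothetical.

```python
import math


def clusters_needed(concurrent_queries: int, min_clusters: int = 1,
                    max_clusters: int = 4, queries_per_cluster: int = 8) -> int:
    """Simplified auto-scale decision for a multi-cluster warehouse.

    Starts enough clusters so no query has to queue, but never more than
    max_clusters (beyond that, queries queue); when load drops, shuts
    clusters down to no fewer than min_clusters.
    """
    wanted = math.ceil(concurrent_queries / queries_per_cluster)
    return max(min_clusters, min(max_clusters, wanted))


# Concurrent queries over a day on a warehouse with
# MIN_CLUSTER_COUNT = 1 and MAX_CLUSTER_COUNT = 4.
hourly_load = [2, 6, 12, 30, 45, 20, 5]
cluster_plan = [clusters_needed(q) for q in hourly_load]
```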

12. Explain the role of Streams and Tasks in Snowflake.

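Streams in Snowflake record data manipulation language (DML) changes to tables, such as INSERTs, UPDATEs, and DELETEs. A stream tracks an offset and returns the rows changed since it was last consumed, along with metadata columns such as METADATA$ACTION, so downstream processes can act on just the new changes.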

Tasks in Snowflake enable users to schedule automated SQL statements, often using the changes tracked by Streams.

Together, Streams and Tasks can automate and orchestrate incremental data processing workflows, such as transforming and loading data into target tables.
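The core idea, that a stream only remembers a position in the table’s change history and a task consumes whatever is new, can be sketched as a toy Python model. All classes here are hypothetical stand-ins: unlike a real standard stream, which returns the net change per row, this toy replays every recorded change, and `run_task` only imitates a task whose WHEN clause checks SYSTEM$STREAM_HAS_DATA.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    """Toy table with an append-only log of DML changes (its version history)."""
    rows: dict = field(default_factory=dict)
    changes: list = field(default_factory=list)  # (action, key, value)

    def insert(self, key, value):
        self.rows[key] = value
        self.changes.append(("INSERT", key, value))

    def delete(self, key):
        self.changes.append(("DELETE", key, self.rows.pop(key)))


@dataclass
class Stream:
    """Toy stream: stores only an offset into the change log, not the data."""
    table: Table
    offset: int = 0

    def has_data(self) -> bool:
        return self.offset < len(self.table.changes)

    def consume(self) -> list:
        """Return changes since the last consumption and advance the offset."""
        batch = self.table.changes[self.offset:]
        self.offset = len(self.table.changes)
        return batch


def run_task(stream: Stream, target: dict) -> int:
    """Toy task: apply new changes to a target, skipping the run if there are none."""
    if not stream.has_data():
        return 0
    batch = stream.consume()
    for action, key, value in batch:
        if action == "INSERT":
            target[key] = value
        else:
            target.pop(key, None)
    return len(batch)
```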

13. Can you integrate Snowflake with a data lake?

Yes, it’s possible to integrate Snowflake with a data lake. This typically involves using Snowflake’s external tables to query data stored in external storage solutions like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage.

This setup enables users to leverage the scalability of data lakes for raw data storage while using Snowflake’s computing capabilities to query and analyze the data without moving it into Snowflake.

14. How would you set up a data lake integration using Snowflake’s external tables?

Use this question to follow up on the previous one. Candidates should outline the following steps for the successful integration of a data lake with external tables:

Ensure data is stored in a supported cloud storage solution

Define an external stage in Snowflake that points to the location of the data-lake files

Create external tables that reference this stage

Specify the schema based on the data format (like JSON or Parquet)

This setup enables users to query the content of the data lake directly using SQL without importing data into Snowflake.
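Here is a minimal sketch of the Snowflake statements for those steps, assembled by a Python helper so they can be inspected and tested. It assumes Parquet files, a storage integration that an administrator has already created, and hypothetical names for the bucket, stage, table, and columns (`event_date`, `user_id`).

```python
def external_table_ddl(integration: str, location_url: str, stage: str,
                       table: str) -> list[str]:
    """Return statements that expose Parquet files in a data lake as a table."""
    return [
        # A stage pointing at the data-lake location; the storage
        # integration (created beforehand) holds the cloud credentials.
        f"CREATE STAGE {stage} URL = '{location_url}' "
        f"STORAGE_INTEGRATION = {integration} FILE_FORMAT = (TYPE = PARQUET);",
        # An external table over the stage. Each row exposes the file record
        # as a VARIANT column named VALUE; typed virtual columns derive from it.
        f"CREATE EXTERNAL TABLE {table} ("
        "event_date DATE AS (VALUE:event_date::DATE), "
        "user_id STRING AS (VALUE:user_id::STRING)) "
        f"LOCATION = @{stage} FILE_FORMAT = (TYPE = PARQUET) AUTO_REFRESH = FALSE;",
        # Register the files that are already in the location.
        f"ALTER EXTERNAL TABLE {table} REFRESH;",
    ]
```

AUTO_REFRESH is turned off here so the sketch doesn’t depend on event notifications; once notifications are configured for the bucket, enabling it keeps the table’s file list current automatically.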

15. What are the encryption methods that Snowflake uses?

Snowflake uses multiple layers of encryption to secure data:

All data stored in Snowflake, including backups and metadata, is encrypted at rest using AES-256 encryption

Snowflake manages encryption keys using a hierarchical key model, with each level of the hierarchy having a different rotation and scope policy

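Snowflake supports customer-managed keys through Tri-Secret Secure (Business Critical Edition), which combines a customer-managed key with a Snowflake-managed key, so customers can control key rotation and revocation independently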

16. How would you audit data access in Snowflake?

Snowflake provides different tools for auditing data access:

The ACCESS_HISTORY view in the SNOWFLAKE.ACCOUNT_USAGE schema (Enterprise Edition and higher) enables you to track who accessed what data and when

Snowflake’s role-based access control enables you to review and manage who has permission to access what data, further enhancing your auditing capabilities

Third-party tools and services can help monitor, log, and analyze access patterns
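As an example of the first approach, the helper below builds a query against the ACCESS_HISTORY view in the SNOWFLAKE.ACCOUNT_USAGE schema, flattening the BASE_OBJECTS_ACCESSED array to find who read a given table. Treat it as a sketch: the helper and table names are hypothetical, the view requires Enterprise Edition or higher, and ACCOUNT_USAGE data arrives with some latency.

```python
def access_history_sql(table_fqn: str, days: int = 7) -> str:
    """Return SQL listing who read `table_fqn` (DB.SCHEMA.TABLE) in the last `days` days.

    Names are formatted into the SQL for readability only; in real code,
    pass them as bind parameters instead.
    """
    object_name = table_fqn.upper()  # unquoted identifiers are stored in upper case
    return (
        "SELECT ah.user_name, ah.query_start_time, ah.query_id\n"
        "FROM snowflake.account_usage.access_history AS ah,\n"
        # BASE_OBJECTS_ACCESSED is an array of objects; FLATTEN yields one row each.
        "     LATERAL FLATTEN(input => ah.base_objects_accessed) AS obj\n"
        f"WHERE obj.value:objectName::STRING = '{object_name}'\n"
        f"  AND ah.query_start_time >= DATEADD(day, -{days}, CURRENT_TIMESTAMP())\n"
        "ORDER BY ah.query_start_time DESC;"
    )
```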

17. Describe how Snowflake complies with GDPR.

Knowledgeable candidates will know that Snowflake supports GDPR compliance by:

Ensuring data encryption at rest and in transit

Offering fine-grained access controls

Providing data-lineage features which are essential for tracking data processing

Enabling users to choose data storage in specific geographical regions to comply with data residency requirements

If you need to evaluate candidates’ GDPR knowledge, you can use our GDPR and Data Privacy test.

18. What was the most complex data workflow you automated using Snowflake?

Expect candidates to explain how they automated a complex workflow in the past; for example, they might have created an analytics platform for large-scale IoT data or a platform handling financial data.

Look for details on the challenges they faced and the specific ways they tackled them to process and transform the data. Which Snowflake features did they use? What external integrations did they put in place? How did they share access with different users? How did they maintain data integrity?

19. How can you use Snowflake to support semi-structured data?

Snowflake is excellent at handling semi-structured data such as JSON, XML, Avro, and Parquet files. It can ingest semi-structured data directly without requiring a predefined schema, storing this data in a VARIANT type column.

Data engineers can then use Snowflake’s powerful SQL engine to query and transform this data just like structured data.

This is particularly useful for cases where the data schema can evolve over time. Snowflake dynamically parses and identifies the structure when queried, simplifying data management and integration.
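To show what querying a VARIANT column by path looks like, here is a small Python emulation of Snowflake’s path notation (for example, `src:device.readings[0].temp` in SQL). The function name, the `src` column, and the sample record are hypothetical; like Snowflake, the function returns a null value (here `None`) for a path that doesn’t exist instead of raising an error.

```python
import re

# Matches either a key ("device") or an array index ("[0]") in a path.
_PATH_TOKEN = re.compile(r"([^.\[\]]+)|\[(\d+)\]")


def variant_get(document, path: str):
    """Follow a Snowflake-style path such as 'device.readings[0].temp'.

    Returns None (Snowflake would return NULL) when a key or index is missing.
    """
    current = document
    for key, index in _PATH_TOKEN.findall(path):
        if key:
            if not isinstance(current, dict) or key not in current:
                return None
            current = current[key]
        else:
            position = int(index)
            if not isinstance(current, list) or position >= len(current):
                return None
            current = current[position]
    return current


# A parsed JSON record, as it might sit in a VARIANT column named src.
# SQL equivalent of a lookup: SELECT src:device.readings[1].temp FROM raw_events;
record = {"device": {"id": "th-01", "readings": [{"temp": 21.5}, {"temp": 22.0}]}}
```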

20. Explain how Snowflake manages data concurrency.

To manage data concurrency, Snowflake uses its multi-cluster architecture, where each virtual warehouse operates independently. This enables multiple queries to run simultaneously without a drop in the overall performance.

Additionally, Snowflake supports ACID transactions, using the READ COMMITTED isolation level for tables and locking tables while DML statements modify them, to ensure data integrity and consistency across all concurrent operations.

21. How do you configure and optimize Snowpipe for real-time data ingestion in Snowflake?

Snowpipe is Snowflake's service for continuously loading data as soon as it arrives in a cloud storage staging area.

To configure Snowpipe, you define a pipe object that wraps a COPY INTO statement, which specifies the stage holding the source data files and the target table. To optimize it, keep files close to Snowflake’s recommended size (roughly 100 MB to 250 MB compressed) and enable auto-ingest, which uses event notifications from your cloud storage to trigger loading automatically when new files arrive.
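A minimal sketch of the pipe definition, assembled in Python for testability. It assumes an external stage that already exists, a target table with a single VARIANT column for JSON records, and hypothetical object names. After creating the pipe on AWS, SHOW PIPES returns a notification_channel value that you use when configuring the bucket’s event notifications.

```python
def snowpipe_ddl(pipe: str, table: str, stage: str) -> list[str]:
    """Return statements to create and then inspect an auto-ingest pipe for JSON files."""
    return [
        # The pipe wraps a COPY INTO statement: source stage -> target table.
        # AUTO_INGEST = TRUE loads files when cloud event notifications arrive.
        f"CREATE PIPE {pipe} AUTO_INGEST = TRUE AS "
        f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (TYPE = 'JSON');",
        # Lists the pipe, including its notification_channel.
        f"SHOW PIPES LIKE '{pipe}';",
        # Reports the pipe's execution state and pending file count as JSON.
        f"SELECT SYSTEM$PIPE_STATUS('{pipe}');",
    ]
```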

22. What are the implications of using large result sets in Snowflake?

Handling large result sets in Snowflake can lead to increased query execution times and higher compute costs.

To manage this effectively, data engineers can:

Reuse previously computed results through result set caching

Optimize query design to filter unnecessary data early in the process

Use approximate aggregation functions

Define clustering keys on large tables so that queries can prune more micro-partitions

23. What is the difference between using a COPY INTO command versus INSERT INTO for loading data into Snowflake?

Candidates should explain the following differences:

COPY INTO is best for the high-speed bulk loading of external data from files into a Snowflake table and for large volumes of data. It directly accesses files stored in cloud storage, like AWS S3 or Google Cloud Storage.

INSERT INTO is used for loading data row-by-row or from one table to another within Snowflake. It’s suitable for smaller datasets or in case the data is already in the database.

COPY INTO is generally much faster and more cost-effective for large data loads than INSERT INTO.

24. How do you automate Snowflake operations using its REST API?

Automating Snowflake operations using its REST API involves programmatically sending HTTP requests to perform a variety of tasks such as executing SQL commands, managing database objects, and monitoring performance.

Users can integrate these API calls into custom scripts or applications to automate data loading, user management, and resource monitoring, for example.
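For example, Snowflake’s SQL API accepts a POST to the `/api/v2/statements` endpoint with the SQL text in a JSON body. The sketch below only builds the request with Python’s standard library, so it runs without credentials; the account identifier, token, and object names are placeholders, and it assumes key-pair (JWT) authentication.

```python
import json
import urllib.request


def build_sql_api_request(account: str, token: str, statement: str,
                          warehouse: str, database: str, schema: str,
                          role: str, timeout: int = 60) -> urllib.request.Request:
    """Prepare (but do not send) a request to Snowflake's SQL API v2."""
    url = f"https://{account}.snowflakecomputing.com/api/v2/statements"
    body = {
        "statement": statement,
        "timeout": timeout,  # seconds before the statement is cancelled
        "warehouse": warehouse,
        "database": database,
        "schema": schema,
        "role": role,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {token}",
            # Tells Snowflake which kind of token the Authorization header holds.
            "X-Snowflake-Authorization-Token-Type": "KEYPAIR_JWT",
        },
    )
```

To send it, pass the request to `urllib.request.urlopen` and parse the JSON response, which includes a statement handle you can use to check on long-running statements.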

25. What are some common APIs used with Snowflake?

Snowflake supports several APIs that enable integration and automation. Examples include:

REST API, enabling users to automate administrative tasks, such as managing users, roles, and warehouses, as well as executing SQL queries

JDBC and ODBC APIs, enabling users to connect traditional client applications to Snowflake and query and manipulate data from them

Use our Creating REST API test to evaluate candidates’ ability to follow generally accepted REST API standards and guidelines.

Need more ideas? Below, you’ll find 30 additional interview questions you can use to assess applicants’ Snowflake skills.

Explain the concept of Clustering Keys in Snowflake.

How can you optimize SQL queries in Snowflake for better performance?

How do you ensure that your SQL queries are cost-efficient in Snowflake?

What are the best practices for data loading into Snowflake?

How do you perform data transformations in Snowflake?

How do you monitor and manage virtual warehouse performance in Snowflake?

What strategies would you use to manage compute costs in Snowflake?

Can you explain the importance of Continuous Data Protection in Snowflake?

How would you implement data governance in Snowflake?

What tools and techniques do you use for error handling and debugging in Snowflake?

How would you handle large scale data migrations to Snowflake?

What are Materialized Views in Snowflake and how do they differ from standard views?

Describe how you integrate Snowflake with external data sources.

How does Snowflake integrate with ETL tools?

What BI tools have you integrated with Snowflake and how?

How do you implement role-based access control in Snowflake?

What steps would you take to secure sensitive data in Snowflake?

Share an experience where you optimized a Snowflake environment for better performance.

Describe a challenging problem you solved using Snowflake.

How have you used Snowflake's scalability features in a project?

Can you describe a project where Snowflake significantly impacted business outcomes?

What challenges do you foresee in scaling data operations in Snowflake in the coming years?

What are your considerations when setting up failover and disaster recovery in Snowflake?

How would you use Snowflake for real-time analytics?

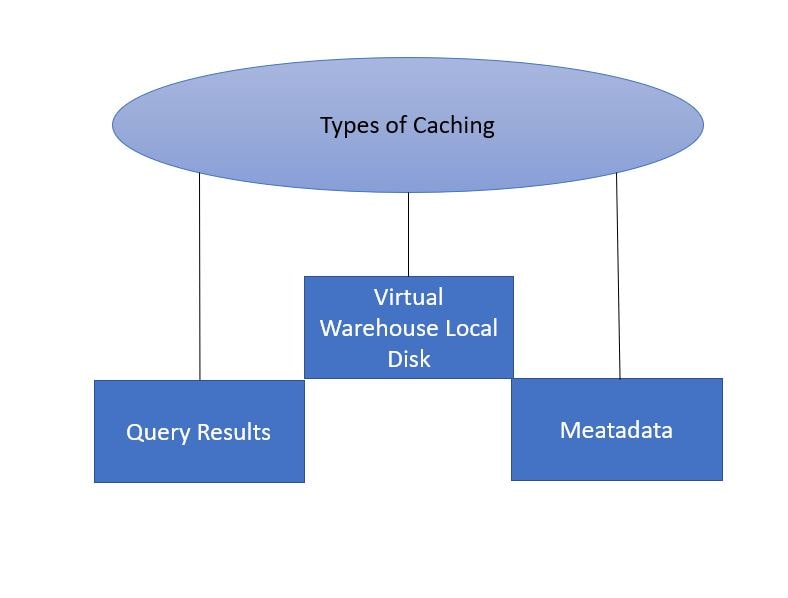

Explain how to use caching in Snowflake to improve query performance.

How do you manage and troubleshoot warehouse skew in Snowflake?

Describe the process of configuring data retention policies in Snowflake.

Explain how Snowflake's query compilation works and its impact on performance.

Describe how to implement end-to-end encryption in a Snowflake environment.

Provide an example of using Snowflake for a data science project involving large-scale datasets.

You can also use our interview questions for DBAs, data engineers, or data analysts.

To identify and hire the best data professionals, you need a robust recruitment funnel that lets you narrow down your list of candidates quickly and efficiently.

For this, you can use our Snowflake test, in combination with other skills tests. Then, you simply need to invite the best talent to an interview, where you ask them targeted Snowflake interview questions like the ones above to further assess their proficiency.

This skills-based approach to hiring yields better results than resume screening, according to more than 70% of employers.

Sign up for a free live demo to chat with one of our team members and find out more about the benefits of skills-first hiring – or sign up for our Free forever plan to build your first assessment today.


15 Common Snowflake Interview Questions

Stepping into the data engineering interviews, particularly for a platform as specialized as Snowflake, can be both an exciting and daunting prospect. Whether you’re a seasoned data engineer or just starting your data journey, understanding the nuances of Snowflake is key to acing your interview.

This article presents 15 common Snowflake interview questions that encapsulate the breadth of knowledge and depth of understanding expected from candidates. From fundamental concepts to complex scenarios, these questions are carefully selected to prepare you for the multifaceted challenges of a Snowflake interview. For beginners, this is your interview guide for Snowflake. For all aspirants, Data Engineer Academy stands beside you, offering insights, resources, and support to turn the challenge of an interview into the opportunity of a career.

What to Expect in a Snowflake Interview

Following the preliminaries, the interviewer will often move on to the core technical portion. Here, you might encounter a series of questions that start at a high level, focusing on Snowflake’s distinct architecture and its benefits over traditional data warehouses. Be ready to discuss its unique capabilities, like handling semi-structured data and automatic scaling.

The format often includes a practical component, such as a live coding exercise or a case study review, where your problem-solving skills and ability to navigate Snowflake’s interface are put to the test. These exercises aim to simulate real-world scenarios, assessing your competence in applying Snowflake solutions to business requirements.

Finally, the interview will typically conclude with a chance for you to ask questions. This is your opportunity to show your interest in the company’s use of Snowflake and to clarify any specifics about the role you’re applying for. Throughout the process, interviewers are looking for clear communication, a solid understanding of data engineering principles, and specific expertise in managing and leveraging the Snowflake platform.

15 Snowflake Interview Questions – Expert Opinion

How would you design a Snowflake schema to optimize storage and query performance for a large-scale e-commerce platform’s transactional data?

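To optimize storage and query performance, I’d design the schema around efficient pruning and clustering. Snowflake divides tables into micro-partitions automatically, so instead of partitioning manually, I’d load transactions in date order and, for very large tables, define a clustering key on the transaction date to keep historical data well organized. Adding other commonly filtered attributes with moderate cardinality, like product category, to the clustering key can further reduce scan times; very high-cardinality columns such as customer ID are usually poor clustering keys on their own.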

Explain the process of using Snowpipe for real-time data ingestion. How does it differ from traditional batch-loading methods in Snowflake?

Snowpipe enables continuous, near-real-time data ingestion by automatically loading data as soon as it’s available in a cloud storage staging area. This differs from traditional batch loading, where data is loaded at predefined intervals. Snowpipe’s near-real-time processing ensures that data is available for analysis without significant delay.

Describe how you would implement time travel and zero-copy cloning in Snowflake to create a point-in-time snapshot of your data for analysis.

Time Travel allows you to access historical data up to 90 days in the past (1 day by default; longer retention requires Enterprise Edition or higher), enabling point-in-time analysis without additional data replication. Zero-copy cloning complements this by instantly creating writable clones of databases, schemas, or tables, optionally as of a point in time, without duplicating data. This facilitates parallel environments for development or testing without affecting the primary datasets.

Given a scenario where query performance starts to degrade as data volume grows, what steps would you take to diagnose and optimize the queries in Snowflake?

When query performance degrades, I’d start by examining the query execution plan to identify bottlenecks. Utilizing Snowflake’s QUERY_HISTORY function can help pinpoint inefficient queries. From there, I’d consider re-clustering data, revising the query for performance, or resizing the virtual warehouse to match the workload.

How can you utilize Snowflake’s virtual warehouses to manage and scale compute resources effectively for varying workloads? Provide a real-world example.

Virtual warehouses can be scaled up or down based on workload demands. For varying workloads, implementing auto-scaling or using separate warehouses for different workloads ensures that compute resources are efficiently managed. For example, a separate, smaller warehouse could handle continuous ETL jobs, while a larger one is used for ad-hoc analytical queries.

Discuss the role of Caching in Snowflake and how it affects query performance. Can you force a query to bypass the cache?

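Caching significantly improves query performance. Snowflake’s result cache stores the results of previously executed queries for 24 hours, and when an identical query runs again and the underlying data hasn’t changed, Snowflake returns the cached result without using warehouse compute. Warehouses also cache table data locally, which speeds up queries that read the same data. To bypass the result cache, you can run ‘ALTER SESSION SET USE_CACHED_RESULT = FALSE;’, ensuring queries are executed afresh.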
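The reuse rules can be illustrated with a toy Python model: a cached result is returned only if the query text matches exactly, none of the tables it read have changed, the entry hasn’t expired, and USE_CACHED_RESULT is on. The class is hypothetical; real Snowflake applies further conditions (for example, queries that use functions such as CURRENT_TIMESTAMP() aren’t reused) and caps reuse at 31 days.

```python
import time


class ResultCache:
    """Toy model of a query result cache with Snowflake-like reuse rules."""

    TTL_SECONDS = 24 * 3600  # results are kept 24 hours; each reuse resets the clock

    def __init__(self):
        # query text -> (result, table versions seen, expiry time)
        self._entries = {}
        # Mirrors the USE_CACHED_RESULT session parameter.
        self.use_cached_result = True

    def run(self, query, tables, table_versions, execute, now=None):
        """Return (result, served_from_cache)."""
        now = time.time() if now is None else now
        versions = {t: table_versions[t] for t in tables}
        entry = self._entries.get(query)  # exact text match only
        if (self.use_cached_result and entry is not None
                and entry[1] == versions and now < entry[2]):
            result = entry[0]
            self._entries[query] = (result, versions, now + self.TTL_SECONDS)
            return result, True
        result = execute()  # in Snowflake, this would use warehouse compute
        self._entries[query] = (result, versions, now + self.TTL_SECONDS)
        return result, False
```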

Describe a strategy to implement data sharing between two Snowflake accounts, ensuring data security and governance are maintained.

For secure data sharing, I’d use Snowflake’s Secure Data Sharing feature, allowing direct sharing of data without copying or moving it. Sharing only secure views that expose the rows and columns each consumer should see, combined with role-based access control (and masking or row access policies where needed), ensures that shared data is accessed following data governance policies.

How would you configure Snowflake to handle structured and semi-structured data (e.g., JSON, XML) from IoT devices in a smart home ecosystem?

Snowflake natively supports semi-structured data types like JSON and XML. I’d configure FILE FORMAT objects to specify how these data types are parsed and loaded into Snowflake, using VARIANT columns to store the semi-structured data. This enables querying the data directly using SQL, leveraging Snowflake’s parsing functions.

Explain the approach you would take to migrate an existing data warehouse to Snowflake, including how you would handle the ETL processes.

For data warehouse migration, I’d first assess the existing schema, data, and ETL processes. Next, I’d export the data to cloud storage and bulk-load it into Snowflake with COPY INTO, since Snowflake’s replication and failover features work only between Snowflake accounts. Finally, I’d transform the existing ETL processes to leverage Snowflake’s ELT capabilities (loading raw data first and transforming it inside Snowflake) and optimize them for Snowflake’s architecture.

In Snowflake, how do you manage and monitor resource usage to stay within budget while ensuring performance is not compromised?

To manage resources and stay within budget, I’d implement Snowflake’s resource monitors to track and limit consumption. Additionally, using smaller virtual warehouses for routine tasks and reserving larger warehouses for compute-intensive operations helps balance performance and cost.

Describe the best practices for setting up Role-Based Access Control (RBAC) in Snowflake to ensure data security and compliance.

Implementing RBAC involves defining roles that correspond to job functions and assigning minimum required privileges to each role. Regularly auditing access and privileges ensures compliance and data security. Utilizing Snowflake’s access history and integrating it with third-party security services can enhance governance.

How would you leverage Snowflake’s support for ANSI SQL to perform complex transformations and analytics on the fly?

Snowflake’s full support for ANSI SQL means complex transformations and analytics can be performed directly on the data without specialized syntax. I’d leverage window functions, CTEs, and aggregation functions for in-depth analytics, ensuring SQL queries are optimized for Snowflake’s architecture.
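To illustrate, the query below combines a CTE with two window functions. It runs against SQLite through Python’s built-in `sqlite3` module as a stand-in engine (window functions need SQLite 3.25 or later); the same standard SQL syntax also works in Snowflake. The `orders` table and its data are hypothetical.

```python
import sqlite3

QUERY = """
WITH daily AS (                                -- CTE: one row per customer per day
    SELECT customer_id, order_date, SUM(amount) AS day_total
    FROM orders
    GROUP BY customer_id, order_date
)
SELECT customer_id,
       order_date,
       day_total,
       SUM(day_total) OVER (PARTITION BY customer_id ORDER BY order_date) AS running_total,
       RANK() OVER (PARTITION BY order_date ORDER BY day_total DESC) AS rank_that_day
FROM daily
ORDER BY customer_id, order_date
"""


def run_demo():
    """Load a few sample orders into an in-memory database and run QUERY."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, order_date TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
        (1, "2024-01-01", 10.0), (1, "2024-01-01", 5.0), (1, "2024-01-02", 20.0),
        (2, "2024-01-01", 30.0), (2, "2024-01-02", 7.0),
    ])
    rows = conn.execute(QUERY).fetchall()
    conn.close()
    return rows
```

Each result row holds a customer’s daily total, their running total to date, and how they ranked against other customers that day.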

Discuss how you would use Snowflake’s external tables feature to query data directly in an external cloud storage service (e.g., AWS S3, Azure Blob Storage).

External tables allow querying data directly in a cloud storage service without loading it into Snowflake. This is particularly useful for ETL processes and data lakes. Configuring file format options and storage integration objects enables seamless access to data stored in AWS S3 or Azure Blob Storage.

Provide an example of how you would use Snowflake’s Materialized Views to improve the performance of frequently executed, complex aggregation queries.

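Materialized Views store pre-computed results of complex queries, significantly speeding up query performance. I’d identify frequently executed queries, especially those with heavy aggregations over a single large table, and create materialized views to store the results. Snowflake maintains materialized views automatically in the background as the base table changes, so results stay current without manual refreshes, although this maintenance consumes credits. In Snowflake, a materialized view can query only one table (no joins) and requires Enterprise Edition or higher.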

Explain how you would use Snowflake’s multi-cluster warehouses for a high-demand analytics application to ensure availability and performance during peak times.

For applications with high demand, multi-cluster warehouses provide the necessary compute resources by automatically adding clusters to handle concurrent queries, ensuring performance isn’t compromised. Regular monitoring and adjusting the warehouse size based on demand ensures optimal performance and cost management.

Advanced Snowflake Interview Questions

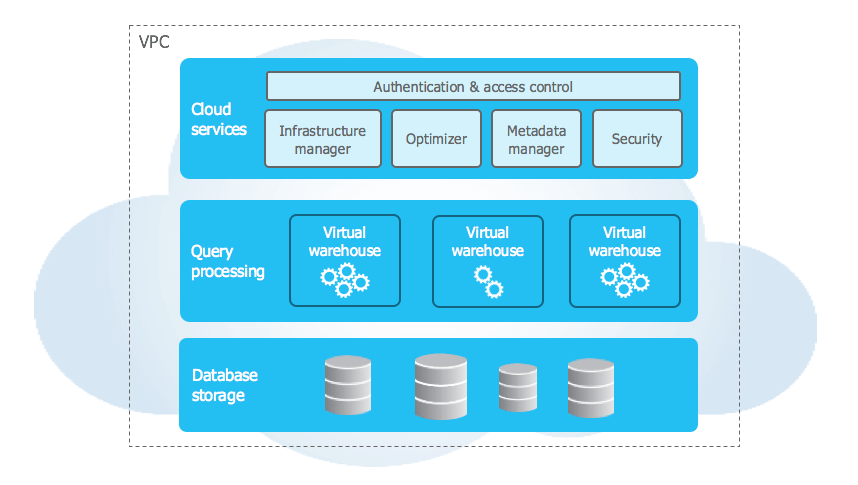

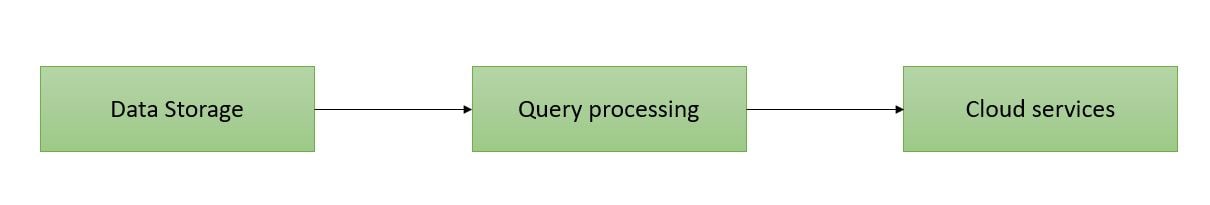

Explain Snowflake’s architecture and how it separates storage and compute. How does this design benefit data processing?

Snowflake’s architecture is built on three layers: Database Storage, Query Processing, and Cloud Services. This separation allows for scalable, concurrent query processing, with storage being independently scalable from compute resources. The benefits include improved performance, elasticity, and cost efficiency, as users only pay for what they use.

How does Snowflake handle data partitioning, and what are micro-partitions?

Snowflake uses micro-partitions, which are automatically managed by the system. These are contiguous units of storage, each holding 50 MB to 500 MB of uncompressed data, stored in columnar form, compressed, and described by metadata for efficient access. This approach eliminates the need for manual partitioning and improves query performance through partition pruning.

Describe the process and benefits of using Snowpipe for continuous data ingestion.

Snowpipe allows for the continuous ingestion of data into Snowflake by automating the loading process. It leverages cloud messaging services to detect new data files as they arrive in a staging area. The benefits include near real-time data availability, reduced latency, and the ability to handle high-frequency data loads.

What is a Snowflake Time Travel and how can it be used to recover data?

Time Travel in Snowflake allows users to query historical data at any point within a defined retention period. It can be used to recover from accidental data modifications or deletions by creating clones of tables or databases as they existed at a specific time.

Discuss the role and configuration of clustering keys in Snowflake. When would you manually define a clustering key?

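Clustering keys improve query performance by co-locating rows with similar values in specific columns within the same micro-partitions. Data is naturally clustered by the order in which it’s loaded, and once you define a clustering key, Snowflake’s Automatic Clustering service maintains it in the background (which consumes credits). Manually defining a clustering key is beneficial for large tables where queries frequently filter or aggregate on specific columns that the load order doesn’t match, reducing scan times and improving efficiency.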

Explain how data sharing works in Snowflake and provide a use case where this feature is beneficial.

Data sharing in Snowflake allows organizations to share read-only access to their data with other Snowflake users without physically moving the data. A use case would be a retailer sharing sales data with suppliers for inventory management and demand forecasting, enabling real-time collaboration and decision-making.

How does Snowflake handle semi-structured data, and what are VARIANT data types?

Snowflake supports semi-structured data formats like JSON, Avro, ORC, Parquet, and XML. The VARIANT data type allows storage and querying of this data without needing a fixed schema. This flexibility is useful for handling dynamic and nested data structures commonly found in modern applications.

Describe the process of using Snowflake Streams and Tasks for managing change data capture (CDC).

Snowflake Streams track changes to a table (INSERT, UPDATE, DELETE) by recording an offset in the table’s version history, making the changes since the stream was last consumed available for later use without copying the data. Tasks can be used to schedule and automate the execution of SQL statements, enabling the implementation of CDC workflows. Together, they help keep data synchronized across systems and maintain data integrity.

What are the best practices for securing data in Snowflake?

Best practices include:

- Implementing role-based access control (RBAC) to ensure users have the minimum necessary permissions.

- Using multi-factor authentication (MFA) for additional security.

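- Relying on Snowflake’s default encryption of data at rest and in transit, and considering customer-managed keys (Tri-Secret Secure, available in Business Critical Edition) where stricter key control is required.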

- Regularly auditing and monitoring access logs and activities.

- Leveraging Snowflake’s integration with external security tools and services for comprehensive security management.
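Least-privilege RBAC is easier to reason about with a concrete model of role inheritance: in Snowflake, granting role A to role B gives B every privilege A holds. The Python sketch below is a hypothetical in-memory model (the role names and privilege strings are made up for illustration), not Snowflake’s API; it answers "can this role do X?" by walking the role hierarchy.

```python
from collections import deque

# Privileges granted directly to each role (illustrative names only).
ROLE_PRIVILEGES = {
    "ANALYST": {"SELECT ON sales.orders"},
    "ENGINEER": {"INSERT ON sales.orders"},
    "DATA_ADMIN": {"CREATE TABLE ON SCHEMA sales"},
}

# Role hierarchy: each role inherits the roles granted to it (listed in its set).
ROLE_GRANTS = {
    "DATA_ADMIN": {"ENGINEER"},
    "ENGINEER": {"ANALYST"},
    "ANALYST": set(),
}

def effective_privileges(role: str) -> set:
    """Collect privileges of `role` plus all roles granted to it (breadth-first)."""
    seen, queue, privileges = {role}, deque([role]), set()
    while queue:
        current = queue.popleft()
        privileges |= ROLE_PRIVILEGES.get(current, set())
        for child in ROLE_GRANTS.get(current, set()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return privileges

def can(role: str, privilege: str) -> bool:
    return privilege in effective_privileges(role)

print(can("DATA_ADMIN", "SELECT ON sales.orders"))  # True: inherited via ENGINEER and ANALYST
print(can("ANALYST", "INSERT ON sales.orders"))     # False: analysts get read access only
```

Keeping each role narrow and composing roles through grants is what lets each user hold only the permissions they need.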

How do you optimize the performance of a Snowflake data warehouse?

Performance optimization techniques include:

- Using the correct warehouse size for the workload.

- Defining appropriate clustering keys.

- Regularly monitoring and tuning queries.

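- Taking advantage of caching: the result cache returns results for repeated identical queries on unchanged data without using a warehouse, and running warehouses cache table data on local storage.

- Minimizing the use of SELECT * queries.

- Using Snowflake’s Query Profile and query history to find and fix bottlenecks.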

Explain the concept of zero-copy cloning in Snowflake. How does it differ from traditional data copying methods?

Zero-copy cloning allows the creation of clones (copies) of databases, schemas, or tables without duplicating the data physically. This is achieved by using metadata pointers, making the process instantaneous and storage-efficient. Unlike traditional methods that require time and storage for data duplication, zero-copy cloning is faster and more cost-effective.
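A minimal Python model of the metadata-pointer idea: a table is a list of references to immutable micro-partitions, cloning copies only that list, and a write replaces the affected partition in one table’s list (copy-on-write). `MicroPartition`, `Table`, and `storage_used` are hypothetical names, and the model ignores Snowflake’s real metadata format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MicroPartition:
    """Immutable chunk of rows; never modified in place."""
    rows: tuple

class Table:
    def __init__(self, partitions):
        self.partitions = list(partitions)  # metadata: pointers to partitions

    def clone(self) -> "Table":
        # Zero-copy: copy the pointer list, share every partition object.
        return Table(self.partitions)

    def update_row(self, partition_idx: int, row_idx: int, value) -> None:
        # Copy-on-write: build a new partition for this table only; the old one
        # stays untouched for any other table (or clone) that still points to it.
        old = self.partitions[partition_idx]
        new_rows = old.rows[:row_idx] + (value,) + old.rows[row_idx + 1:]
        self.partitions[partition_idx] = MicroPartition(new_rows)

def storage_used(*tables) -> int:
    """Count distinct partition objects across tables, i.e. physical storage."""
    return len({id(p) for t in tables for p in t.partitions})

sales = Table([MicroPartition((1, 2, 3)), MicroPartition((4, 5, 6))])
sales_dev = sales.clone()
print(storage_used(sales, sales_dev))  # 2: the clone adds no partitions
sales_dev.update_row(0, 1, 99)
print(storage_used(sales, sales_dev))  # 3: only the modified partition is new
print(sales.partitions[0].rows)        # (1, 2, 3): source is unaffected
```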

Describe the role and benefits of Snowflake’s data marketplace.

Snowflake’s data marketplace allows users to discover, access, and share live data across different organizations seamlessly. The benefits include access to a diverse range of datasets for enriching analytics, simplified data sharing without ETL processes, and fostering collaboration between data providers and consumers.

What strategies can be employed for efficient cost management in Snowflake?

Cost management strategies include:

- Monitoring and optimizing warehouse usage.

- Using resource monitors to track and control spending.

- Configuring warehouse auto-suspend and auto-resume to avoid paying for idle compute.

- Using transient or temporary tables for data that does not need Fail-safe protection, which lowers storage costs.

- Implementing data retention policies to manage historical data cost-effectively.
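Because warehouses bill per second while running, with a 60-second minimum each time one starts or resumes, the auto-suspend setting directly affects cost. The Python sketch below estimates daily credits for a workload of short, spread-out queries under two auto-suspend settings. It assumes the standard per-hour credit rates for standard warehouses (X-Small = 1, doubling with each size), assumes the warehouse suspends between runs, and ignores cloud services charges; the function names are hypothetical, and actual prices depend on edition, cloud, and region.

```python
# Credits per hour for standard warehouses (each size doubles the previous one).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

def credits_for_resume(size: str, busy_seconds: float, auto_suspend_seconds: int) -> float:
    """Credits for one resume: busy time plus the idle time that elapses before
    auto-suspend kicks in, with a 60-second minimum per resume."""
    billed_seconds = max(busy_seconds + auto_suspend_seconds, 60)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600

def daily_credits(size: str, runs_per_day: int, busy_seconds: float,
                  auto_suspend_seconds: int) -> float:
    # Assumes runs are far enough apart that the warehouse suspends between them.
    return runs_per_day * credits_for_resume(size, busy_seconds, auto_suspend_seconds)

# 48 dashboard refreshes per day, each keeping a Medium warehouse busy for 30 seconds
for suspend in (600, 60):
    print(f"auto_suspend={suspend}s: {daily_credits('M', 48, 30, suspend):.1f} credits/day")
```

Under these assumptions, lowering auto-suspend from 600 to 60 seconds cuts the estimate from 33.6 to 4.8 credits per day, because most of the billed time in the first case is idle time.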

How does Snowflake support data governance, and what features facilitate compliance with regulatory requirements?

Snowflake supports data governance through features such as:

- Role-based access control (RBAC) for data security.

- Data masking policies to protect sensitive information.

- Comprehensive audit logs for tracking data access and modifications.

- Integration with external governance tools, plus features that help organizations meet regulatory requirements such as GDPR and HIPAA.

- Time Travel and fail-safe features for data recovery and integrity.
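A masking policy is essentially a function of the stored value and the querying role, evaluated at query time. The Python sketch below mirrors the CASE expression such a policy typically contains; the role names and the `mask_email` helper are hypothetical, and in Snowflake the logic would live in a CREATE MASKING POLICY statement attached to a column rather than in application code.

```python
FULL_ACCESS_ROLES = {"COMPLIANCE_OFFICER", "SUPPORT_LEAD"}  # hypothetical roles

def mask_email(value: str, current_role: str) -> str:
    """Return the raw email for privileged roles; otherwise keep only the domain."""
    if current_role in FULL_ACCESS_ROLES:
        return value
    _, _, domain = value.partition("@")
    return "*****@" + domain if domain else "*****"

rows = ["ada@example.com", "grace@example.org"]
print([mask_email(r, "ANALYST") for r in rows])             # ['*****@example.com', '*****@example.org']
print([mask_email(r, "COMPLIANCE_OFFICER") for r in rows])  # unmasked values
```

Because the policy is applied when the column is queried, the stored data is unchanged; only what each role sees differs.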

Discuss the advantages and potential challenges of using Snowflake in a multi-cloud environment.

Advantages include:

- Flexibility to choose the best cloud provider for specific needs.

- Redundancy and high availability.

- Avoidance of vendor lock-in.

Potential challenges include:

- Complexity in managing multi-cloud architectures.

- Data consistency and synchronization issues.

- Potentially higher costs due to cross-cloud data transfers.

These questions aim to evaluate a candidate’s deep understanding of Snowflake and their ability to apply this knowledge in real-world scenarios.

SQL on Snowflake Interview Questions

SQL, as implemented by Snowflake, extends beyond traditional relational database management systems, offering enhanced capabilities particularly suited to cloud-based, large-scale data warehousing.

Snowflake’s support for ANSI SQL means that most SQL knowledge is transferable to Snowflake, but what sets Snowflake apart are its additional features designed to optimize performance, cost, and ease of use in a cloud environment. For instance, Snowflake effortlessly handles semi-structured data formats like JSON, Avro, XML, and Parquet directly within SQL queries, allowing for seamless integration of structured and semi-structured data without the need for external preprocessing.

One critical aspect where SQL on Snowflake shines is in query performance optimization. Snowflake automatically optimizes queries to ensure efficient execution, but understanding how to write queries that leverage Snowflake’s architecture can further enhance performance. This might include utilizing clustering keys appropriately to reduce scan times or designing queries that take advantage of Snowflake’s unique caching mechanisms to minimize compute costs.
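The result cache is the caching mechanism most directly tied to compute cost: if the same query text runs again and the underlying data has not changed, Snowflake can return the stored result without using a warehouse, and a persisted result expires after 24 hours without reuse. The Python sketch below models only that reuse rule. `ResultCache`, the per-table version counters, and the simplified cache key are assumptions for illustration; the real cache also checks conditions such as role privileges and non-deterministic functions, and caps total retention.

```python
import time

class ResultCache:
    TTL_SECONDS = 24 * 3600  # result kept 24 h after its last reuse (simplified)

    def __init__(self):
        self._entries = {}        # (sql, table versions) -> (result, last_used)
        self.table_versions = {}  # table name -> version, bumped on every write

    def write(self, table: str) -> None:
        self.table_versions[table] = self.table_versions.get(table, 0) + 1

    def run(self, sql: str, tables: tuple, execute, now=None):
        """Return (result, used_cache). `execute` stands in for warehouse compute."""
        now = time.time() if now is None else now
        key = (sql, tuple(self.table_versions.get(t, 0) for t in tables))
        entry = self._entries.get(key)
        if entry and now - entry[1] < self.TTL_SECONDS:
            self._entries[key] = (entry[0], now)  # reuse extends retention
            return entry[0], True
        result = execute()
        self._entries[key] = (result, now)
        return result, False

cache = ResultCache()
q = "SELECT region, SUM(amount) FROM sales GROUP BY region"
compute_runs = []

def execute():
    compute_runs.append(1)  # count how often the warehouse would run
    return {"EU": 100}

cache.run(q, ("sales",), execute, now=0)    # miss: warehouse runs
cache.run(q, ("sales",), execute, now=60)   # hit: identical text, unchanged data
cache.write("sales")
cache.run(q, ("sales",), execute, now=120)  # miss: data changed
print(len(compute_runs))                    # 2
```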

Example Interview Questions on SQL on Snowflake:

- How does Snowflake’s handling of semi-structured data differ from traditional SQL databases, and what are the benefits?

This question assesses your understanding of Snowflake’s ability to query semi-structured data using SQL and how it simplifies data analysis workflows.

- Can you demonstrate how to optimize a SQL query in Snowflake for a large dataset?

Here, interviewers look for practical knowledge in query optimization techniques, such as using WHERE clauses effectively, minimizing the use of JOINs, or leveraging Snowflake’s materialized views.

- Describe a scenario where you used window functions in Snowflake to solve a complex analytical problem.

This question aims to explore your ability to use advanced SQL features within Snowflake to perform sophisticated data analysis tasks.

- Explain how Snowflake’s architecture influences SQL query performance, particularly in the context of virtual warehouses.

The response should highlight the separation of computing and storage in Snowflake and how virtual warehouses can be scaled to manage query performance dynamically.
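For the window-function question in the list above, it helps to be precise about what a window function returns. The Python sketch below reproduces a per-customer running total, i.e. the result of `SUM(amount) OVER (PARTITION BY customer_id ORDER BY order_date ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)`. The sample rows and the `running_total` name are made up; unlike GROUP BY, every input row is kept and annotated.

```python
from itertools import groupby
from operator import itemgetter

orders = [
    {"customer_id": "C1", "order_date": "2024-01-03", "amount": 50},
    {"customer_id": "C2", "order_date": "2024-01-01", "amount": 20},
    {"customer_id": "C1", "order_date": "2024-01-01", "amount": 30},
    {"customer_id": "C2", "order_date": "2024-01-05", "amount": 40},
]

def running_total(rows):
    """SUM(amount) OVER (PARTITION BY customer_id ORDER BY order_date
    ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW)."""
    ordered = sorted(rows, key=itemgetter("customer_id", "order_date"))
    result = []
    for _, partition in groupby(ordered, key=itemgetter("customer_id")):
        total = 0  # the window restarts for each partition
        for row in partition:  # rows arrive in order_date order
            total += row["amount"]
            result.append({**row, "running_total": total})
    return result

for row in running_total(orders):
    print(row["customer_id"], row["order_date"], row["running_total"])
```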

Scenario-Based Questions

Scenario-based questions in Snowflake interviews are designed to assess a candidate’s practical problem-solving skills and their ability to apply Snowflake-specific knowledge to real-world situations. These questions often present a hypothetical challenge that a data engineer might face while working with Snowflake, testing the candidate’s understanding of Snowflake’s features, their approach to the problem, and their ability to devise solutions under constraints. Below are some scenario-based questions, along with the thought processes and approaches interviewers expect:

- Scenario: Your company is experiencing slower query performance due to increased data volume and complexity. How would you utilize Snowflake to diagnose and resolve these performance issues?

Approach: This question expects you to discuss using Snowflake’s Query Profile to analyze query execution plans and identify bottlenecks such as large table scans with poor partition pruning or expensive join operations. Mention considering clustering keys for better data organization, or resizing the virtual warehouse to provide additional compute resources. The interviewer looks for an understanding of Snowflake’s performance optimization tools and techniques.

- Scenario: Imagine you are tasked with designing a data pipeline in Snowflake for a retail company that needs to integrate real-time sales data with historical sales data for immediate insights. Describe your approach.

Approach: Candidates should highlight ingesting real-time sales data continuously (for example, with Snowpipe) and using Snowflake’s streams and tasks to capture and process new data incrementally, integrating it with historical data stored in Snowflake. Discuss creating a pipeline that handles both batch and streaming data, ensuring that data is consistently and accurately processed for real-time analytics.

- Scenario: A financial services firm requires a secure method to share sensitive transaction data with external partners using Snowflake. What security measures would you implement to ensure data privacy and compliance?

Approach: This scenario tests knowledge of Snowflake’s secure data-sharing capabilities. You should discuss setting up secure views or secure UDFs (User-Defined Functions) that limit data access based on predefined roles and permissions. Mention the importance of using Snowflake’s dynamic data masking and row access policies to protect sensitive information while complying with regulatory requirements.

- Scenario: You need to migrate an existing on-premises data warehouse to Snowflake without disrupting the current data analytics operations. How would you plan and execute this migration?

Approach: Expect to outline a phased migration strategy that starts with an assessment of the current data warehouse schema and data models. Discuss the use of Snowflake’s tools for automating schema conversion and the ETL processes to move data. Highlight the importance of parallel run phases for validation, ensuring that Snowflake’s environment accurately replicates the data and workflows of the on-premises system before fully cutting over.

FAQ – Tips for Preparing for a Snowflake Interview

Q: How can I build a foundational understanding of Snowflake for the interview?

A: Start by exploring the official Snowflake documentation and resources. Understand the core concepts like architecture, storage, and compute separation. Online courses or tutorials can also offer structured learning paths.

Q: What type of SQL knowledge is necessary for a Snowflake interview?

A: You should be proficient in ANSI SQL with a focus on functions and operations unique to Snowflake. Practice writing queries for both structured and semi-structured data types.

Q: Are real-world scenarios important in Snowflake interviews?

A: Yes, being able to apply Snowflake concepts to solve real-world problems is key. Familiarize yourself with case studies or practical scenarios where Snowflake’s solutions are implemented.

Q: Should I practice using Snowflake’s platform before the interview?

A: Absolutely. Hands-on experience is invaluable. Use Snowflake’s free trial to practice creating databases, loading data, and writing queries.

Q: What’s the best way to understand Snowflake’s performance optimization?

A: Dive into Snowflake’s query optimization features like clustering keys and warehouse sizing. Practice optimizing queries and understand how different factors affect query performance.

Q: How important is knowledge of data warehousing concepts for Snowflake interviews?

A: Very important. Solidify your understanding of data warehousing principles as they are foundational to how Snowflake operates.

Q: How can I prepare for the system design aspects of the interview?

A: Review system design principles with an emphasis on data systems and database design. Be prepared to discuss design decisions within the context of Snowflake.

Q: How do I stay updated on the latest features of Snowflake?

A: Follow Snowflake’s official blog, community forums, and recent product releases. Being current with the latest updates can show interviewers your dedication to staying informed.

Q: Will practicing LeetCode problems help me for a Snowflake interview?

A: Yes, while Snowflake may add its own twist to problems, LeetCode can help sharpen your problem-solving skills, especially for algorithmic and SQL questions.

Q: Any tips for the behavioral part of the Snowflake interview?

A: Reflect on your past experiences with teamwork, problem-solving, and project management. Prepare to discuss how you’ve approached challenges and learned from them.


Chris Garzon

Christopher Garzon has worked as a data engineer for Amazon, Lyft, and an asset management startup, where he was responsible for building the entire data infrastructure from scratch. He is the author of “Ace the Data Engineer Interview” and has helped hundreds of students break into the data engineering industry. He is also an angel investor, an advisor to multiple startups, and the founder and CEO of Data Engineer Academy.


The Ultimate Guide for Snowflake Interview Questions

- May 29, 2024

Prepare for your next career leap with our comprehensive guide to acing Snowflake interview questions and answers. Dive into a curated collection of top-notch inquiries and expertly crafted responses tailored to showcase your proficiency in this cutting-edge data warehousing technology. Whether you’re a seasoned professional or just starting your journey, this article equips you with the insights and strategies needed to impress recruiters and land your dream job.

What is Snowflake?

Snowflake is a cloud-based data warehousing platform that allows organizations to store and analyze large amounts of data. It provides scalable storage, powerful computing capabilities, and tools to easily manage and process data. Unlike traditional data warehouses, Snowflake separates storage and computing, making it flexible and cost-effective. It also supports real-time data sharing and collaboration, making it easier for teams to work together on data projects.

Top 50 Snowflake Interview Questions

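Q1. What do you mean by Horizontal and Vertical Scaling?

Ans: Horizontal scaling involves adding more machines or nodes to a system to distribute load and increase capacity. It’s like adding more lanes to a highway to accommodate more traffic. Vertical scaling, on the other hand, involves increasing the resources (CPU, RAM, etc.) of an existing machine to handle more load. It’s akin to upgrading a car’s engine to make it faster. In Snowflake, vertical scaling corresponds to resizing a virtual warehouse (for example, from Small to Large), while horizontal scaling corresponds to multi-cluster warehouses, which add clusters of the same size to handle more concurrent queries.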

- Horizontal Scaling: Adding more servers to a web application cluster to handle increased user traffic during peak hours.

- Vertical Scaling: Upgrading a database server’s RAM and CPU to improve its performance when handling large datasets.

Q2. How is data stored in Snowflake? Explain Columnar Database?

Ans: Snowflake stores data in a columnar format, which means that each column of a table is stored separately rather than storing entire rows together. This enables more efficient data retrieval, especially for analytical queries that typically involve aggregating or analyzing data across columns rather than entire rows. Columnar databases are optimized for read-heavy workloads and can significantly reduce I/O operations by only accessing the columns needed for a query.

Example: Consider a table with columns for “Product ID,” “Product Name,” “Price,” and “Quantity.” In a columnar database like Snowflake, the values for each column would be stored separately, allowing for efficient querying based on specific columns (e.g., finding the total sales revenue without needing to access the product names).
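The Python sketch below contrasts the two layouts using the example table above: the same four rows stored row by row and column by column. Computing revenue from the columnar layout touches only the price and quantity columns, which is the I/O saving the answer describes. The sample values and function names are made up, and this is a conceptual illustration, not how Snowflake lays out bytes inside micro-partitions.

```python
rows = [  # row-oriented layout: each record kept together
    {"product_id": 1, "product_name": "Lamp", "price": 25.0, "quantity": 4},
    {"product_id": 2, "product_name": "Desk", "price": 150.0, "quantity": 1},
    {"product_id": 3, "product_name": "Chair", "price": 60.0, "quantity": 3},
    {"product_id": 4, "product_name": "Shelf", "price": 80.0, "quantity": 2},
]

# Column-oriented layout: one list per column
columns = {name: [r[name] for r in rows] for name in rows[0]}

def revenue_row_store(rows):
    # In a row store, whole records (including product_name) are read from disk.
    return sum(r["price"] * r["quantity"] for r in rows)

def revenue_column_store(columns):
    # In a column store, only the two needed columns are read.
    return sum(p * q for p, q in zip(columns["price"], columns["quantity"]))

print(revenue_row_store(rows), revenue_column_store(columns))  # 590.0 590.0
```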

Q3. What are micro-partitions in Snowflake, and how do they contribute to the platform’s data storage efficiency?

Ans: Micro-partitions are the fundamental storage units in Snowflake: immutable, compressed, columnar files that each hold between 50 MB and 500 MB of uncompressed data (less on disk, because data is always stored compressed). Snowflake creates them automatically as data is loaded; each contains a subset of a table’s rows and is stored in cloud storage. For every micro-partition, Snowflake records metadata such as the range of values in each column, which enables efficient pruning during query execution: Snowflake reads only the micro-partitions that can contain matching rows, reducing the amount of data scanned for each query. This architecture contributes to Snowflake’s data storage efficiency by keeping storage overhead low and optimizing query performance.

Example: Imagine a large sales table loaded daily. Snowflake groups the rows into micro-partitions roughly in load order and records the minimum and maximum sale date for each one, so a query for a specific date range reads only the micro-partitions whose date ranges overlap it and skips the rest.
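Pruning works because Snowflake keeps per-column metadata, such as minimum and maximum values, for every micro-partition. The Python sketch below applies that idea to a date-range filter; the partition metadata is made-up sample data and `partitions_to_scan` is a hypothetical name.

```python
from datetime import date

# Per-micro-partition metadata: (partition id, min order_date, max order_date)
partitions = [
    ("p1", date(2024, 1, 1), date(2024, 1, 10)),
    ("p2", date(2024, 1, 11), date(2024, 1, 20)),
    ("p3", date(2024, 1, 21), date(2024, 1, 31)),
    ("p4", date(2024, 2, 1), date(2024, 2, 10)),
]

def partitions_to_scan(partitions, start, end):
    """Keep a partition only if its [min, max] range overlaps [start, end];
    all others are pruned without reading their data."""
    return [pid for pid, lo, hi in partitions if lo <= end and hi >= start]

# WHERE order_date BETWEEN '2024-01-15' AND '2024-01-25'
print(partitions_to_scan(partitions, date(2024, 1, 15), date(2024, 1, 25)))  # ['p2', 'p3']
```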

Q4. Explain stages in Snowflake.

Ans: In Snowflake, stages are named locations that hold data files for loading into tables and unloading from them. There are two types of stages: internal stages, which are managed by Snowflake and store files within the Snowflake environment (including the user and table stages that Snowflake provides automatically), and external stages, which reference files hosted outside Snowflake in cloud storage services such as Amazon S3, Google Cloud Storage, or Azure Blob Storage. Stages serve as intermediate storage locations for data files during ingestion and export, providing a secure and efficient way to transfer data between Snowflake and external systems.

Example: An organization may use an external stage in Amazon S3 to load CSV files containing customer data into Snowflake. Snowflake can then efficiently load these files into tables using the data stored in the external stage.

Q5. What is the difference between Snowflake and Redshift?

Ans: Snowflake and Redshift are both cloud-based data warehouses, but they differ in several key aspects:

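- Architecture: Snowflake follows a multi-cluster, shared data architecture in which compute and storage are fully separate, allowing each to scale independently and supporting better concurrency. Redshift was originally built on clusters whose nodes couple compute and local storage; newer RA3 nodes (with Redshift Managed Storage) and Redshift Serverless separate the two, although capacity is still sized at the cluster or serverless workgroup level.

- Concurrency: Snowflake can give each workload its own virtual warehouse and can add clusters automatically with multi-cluster warehouses, enabling many users to run queries simultaneously with little contention. Queries on a Redshift cluster share the same compute by default, so Redshift relies on workload management and concurrency scaling to relieve bottlenecks in highly concurrent environments.

- Management Overhead: Snowflake abstracts much of the management overhead, such as infrastructure provisioning and scaling, from users, making it easier to use. Provisioned Redshift requires more manual management, such as choosing node types and counts and resizing clusters, although Redshift Serverless reduces this.

- Pricing Model: Snowflake bills storage and compute separately, with compute charged per second only while warehouses run, offering flexibility and cost efficiency for varying workloads. Provisioned Redshift is priced mainly by node type and number of nodes (with RA3 managed storage billed separately), while Redshift Serverless charges for the compute capacity used.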

Example: A company with fluctuating query workloads may prefer Snowflake for its ability to scale compute resources independently from storage, reducing costs during periods of low activity.

Q6. Explain Snowpipe.

Ans: Snowpipe is a feature in Snowflake that enables continuous, automated data ingestion from external sources into Snowflake tables. It eliminates the need for manual intervention in loading data by automatically loading new files as they are added to designated stages, triggered either by cloud storage event notifications or by calls to the Snowpipe REST API. Snowpipe loads data in micro-batches shortly after files arrive, making it suitable for near-real-time scenarios where fresh data needs to be quickly available for analysis.

Example: A retail company uses Snowpipe to continuously ingest sales data from online transactions into a Snowflake table. As new files of sales data land in the designated stage, Snowpipe automatically loads them into the target table, allowing analysts to perform near-real-time analysis on customer behavior and trends.
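A property worth probing in interviews is that Snowpipe keeps load metadata per file, so a file that has already been loaded is not ingested again if a duplicate notification arrives. The Python sketch below models that behavior; `SnowpipeModel`, the file names, and the rows are hypothetical, and the model ignores details such as how long load history is retained.

```python
class SnowpipeModel:
    """Toy pipe: loads each staged file into a target list at most once."""

    def __init__(self):
        self.loaded_files = set()  # stands in for the pipe's load history
        self.target_table = []

    def on_file_notification(self, stage: dict, file_name: str) -> bool:
        """Called when cloud storage reports a new file; returns True if loaded."""
        if file_name in self.loaded_files:
            return False  # duplicate event: file already loaded, skip it
        self.target_table.extend(stage[file_name])
        self.loaded_files.add(file_name)
        return True

stage = {
    "sales/2024-05-01-0001.csv": [("order-1", 20.0), ("order-2", 35.5)],
    "sales/2024-05-01-0002.csv": [("order-3", 12.0)],
}
pipe = SnowpipeModel()
for event in ["sales/2024-05-01-0001.csv", "sales/2024-05-01-0002.csv",
              "sales/2024-05-01-0001.csv"]:  # last event is a duplicate notification
    pipe.on_file_notification(stage, event)
print(len(pipe.target_table))  # 3 rows, not 5
```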

Q7. What is the use of the Compute layer in Snowflake?

Ans: The Compute layer in Snowflake is responsible for executing SQL queries and processing data. It comprises virtual warehouses, which are clusters of compute resources provisioned on-demand to execute queries submitted by users. The Compute layer separates compute resources from storage, allowing users to independently scale compute resources based on workload requirements. This architecture enables Snowflake to handle concurrent queries efficiently and provide consistent performance across varying workloads.

Example: During peak business hours, a company can dynamically scale up the compute resources allocated to its virtual warehouse in Snowflake to handle increased query loads from analysts running complex analytics queries. After the peak period, the compute resources can be scaled down to reduce costs.

Q8. What is Data Retention Period in Snowflake?

Ans: The data retention period is the number of days for which Snowflake keeps historical data available to Time Travel. It is set with the DATA_RETENTION_TIME_IN_DAYS parameter at the account, database, schema, or table level, and within that period users can query, clone, or restore data as it existed earlier. The default is 1 day; Standard Edition allows 0 or 1 day, while Enterprise Edition and higher allow up to 90 days for permanent objects. When the retention period ends, data from permanent tables moves into Fail-safe, a further non-configurable 7-day period during which only Snowflake Support can recover it, as protection against catastrophic data loss. Administrators can choose the retention period based on compliance requirements and data retention policies.

Example: If the data retention period is set to 30 days, users can query historical data versions or recover accidentally deleted data from up to 30 days in the past using Time Travel. For the following 7 days, the data sits in Fail-safe, where only Snowflake Support can recover it.

Q9. How does Snowflake handle complex data transformation tasks involving semi-structured or unstructured data formats?

Ans: Snowflake provides native support for semi-structured data formats such as JSON, Avro, ORC, XML, and Parquet through its VARIANT data type. Users can store semi-structured data directly in Snowflake tables and query it using SQL without requiring preprocessing or schema modifications. Snowflake also offers built-in functions, such as PARSE_JSON and FLATTEN, for parsing and manipulating semi-structured data, enabling complex data transformation tasks. For unstructured files such as images or PDFs, Snowflake can keep the files in internal or external stages and reference them through directory tables and file URLs. Additionally, Snowflake’s integration with external data processing frameworks like Spark and Databricks allows users to leverage their preferred tools for advanced data transformation tasks.

Example: An e-commerce company stores product catalog data in JSON format in Snowflake tables. Analysts can use Snowflake’s JSON functions to extract specific attributes from the JSON data and perform analytics, such as analyzing sales trends for different product categories.

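Q10. How does Snowflake support multi-cloud and hybrid cloud deployment strategies, and what are the considerations for implementing such architectures?

Ans: Snowflake runs on AWS, Microsoft Azure, and Google Cloud. Each Snowflake account is hosted in a single cloud region, but an organization can create accounts on several clouds and regions and connect them through database replication, failover groups, and cross-region or cross-cloud data sharing. Snowflake itself is not deployed on premises, so hybrid architectures typically connect on-premises systems to Snowflake through ingestion tools, connectors, and external stages. Considerations for implementing multi-cloud and hybrid cloud architectures with Snowflake include: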

- Data Residency: Ensure compliance with data residency regulations by selecting cloud regions that meet regulatory requirements for data storage and processing.

- Network Connectivity: Establish robust network connectivity between Snowflake and cloud environments to minimize latency and ensure reliable data transfer.

- Data Replication: Implement data replication mechanisms to synchronize data across cloud regions or environments for disaster recovery and high availability.

- Identity and Access Management (IAM): Configure IAM policies and permissions to manage access control and authentication across multiple cloud platforms.

- Cost Optimization: Account for Snowflake credit and storage prices that vary by cloud provider and region, and for data transfer charges when replicating or sharing data across regions or clouds.

- Monitoring and Management: Implement centralized monitoring and management tools to oversee Snowflake deployments across multi-cloud or hybrid environments and ensure performance and availability.

Example: A multinational corporation with data residency requirements in different regions deploys Snowflake across multiple cloud providers (e.g., AWS, Azure) to comply with local data regulations while leveraging Snowflake’s unified management and analytics capabilities.

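Q11. Explain Snowflake’s architecture.

Ans: Snowflake’s architecture is built on a multi-cluster, shared data architecture that separates compute and storage layers. Snowflake describes three layers: database storage, query processing (virtual warehouses), and cloud services; the services, metadata, and query optimization functions listed below belong to the cloud services layer. Key components of Snowflake’s architecture include: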

- Storage: Data is stored in a scalable cloud storage layer, such as Amazon S3 or Azure Blob Storage, in micro-partitions, which are immutable and compressed data files.

- Compute: Virtual warehouses provision compute resources on-demand to execute SQL queries and process data. Compute resources are decoupled from storage, allowing for elastic scaling and better concurrency.

- Services: Snowflake’s cloud services layer orchestrates query processing, metadata management, security, and access control. These services run across multiple availability zones for high availability and fault tolerance.

- Metadata: Metadata services manage schema information, query optimization, transaction management, and data lineage. Metadata is stored separately from user data to ensure scalability and performance.

- Query Processing: SQL queries submitted by users are optimized and executed by Snowflake’s query processing engine. Query optimization techniques, such as cost-based optimization and query compilation, ensure efficient execution.

Example: When a user submits a SQL query to retrieve sales data from a Snowflake table, the query is parsed, optimized, and executed by Snowflake’s query processing engine using compute resources allocated from a virtual warehouse. Data is retrieved from micro-partitions stored in cloud storage, and query results are returned to the user.

Q12. Explain Snowflake Time Travel and Data Retention Period.

Ans: Snowflake Time Travel allows users to access historical versions of data within a specified time window, which can range from 0 to 90 days (up to 1 day in Standard Edition). Time Travel preserves the state of data before each change; because micro-partitions are immutable, this means retaining the micro-partitions that a change replaced along with the metadata for earlier table versions. Users can then query data as it existed at specific points in time (using AT or BEFORE clauses), clone it, or restore dropped objects with UNDROP. The data retention period defines how long these historical versions remain available to Time Travel; it is set with the DATA_RETENTION_TIME_IN_DAYS parameter and can be configured by administrators based on compliance requirements and data retention policies. Fail-safe is a separate, non-configurable period that begins after the retention period ends.

Example: If the data retention period is set to 30 days, users can query historical data versions using Time Travel for any changes made within the past 30 days. Beyond this period, data from permanent tables moves into Fail-safe for 7 days, where only Snowflake Support can recover it, and is then permanently purged.
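The relationship between the retention period and Fail-safe can be expressed as a small function. This Python sketch assumes a permanent table (7-day Fail-safe) and a retention period as set by DATA_RETENTION_TIME_IN_DAYS (values above 1 day require Enterprise Edition or higher); transient and temporary tables have no Fail-safe, which you can model by passing `fail_safe_days=0`. The function name `recovery_option` is hypothetical.

```python
def recovery_option(days_since_change: float, retention_days: int,
                    fail_safe_days: int = 7) -> str:
    """Classify how data changed or dropped `days_since_change` days ago can be recovered."""
    if not 0 <= retention_days <= 90:
        raise ValueError("Time Travel retention ranges from 0 to 90 days")
    if days_since_change <= retention_days:
        return "time travel"  # query with AT/BEFORE, clone, or UNDROP yourself
    if days_since_change <= retention_days + fail_safe_days:
        return "fail-safe"    # recoverable only by Snowflake Support
    return "purged"

for age in (10, 33, 40):
    print(age, recovery_option(age, retention_days=30))
# 10 time travel
# 33 fail-safe
# 40 purged
```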

Q13. What are the different ways to access the Snowflake Cloud data warehouse?

Ans: Snowflake provides multiple ways to access its cloud data warehouse, including:

- Web Interface: Snowflake’s web interface, Snowsight, allows users to interact with the data warehouse using a web browser. It provides a graphical user interface for executing SQL queries, managing objects, monitoring performance, and administering the Snowflake environment.

- SQL Clients: Users can connect to Snowflake using SQL clients such as SQL Workbench/J, DBeaver, or JetBrains DataGrip. These clients offer advanced SQL editing capabilities, query execution, and result visualization.

- Programming Interfaces: Snowflake supports programmatic access through JDBC and ODBC drivers, connectors and drivers for languages such as Python, Node.js, Go, and .NET, and the Snowflake SQL API (REST). These interfaces enable integration with third-party applications, ETL tools, and custom scripts.

- Business Intelligence (BI) Tools: Snowflake integrates with popular BI tools such as Tableau, Power BI, and Looker, allowing users to create interactive dashboards, reports, and visualizations based on Snowflake data.

- Data Integration Platforms: Snowflake provides connectors and integrations with data integration platforms such as Informatica, Talend, and Matillion for extract, transform, and load (ETL) workflows.

Example: An analyst uses SQL Workbench/J to connect to Snowflake and execute SQL queries for ad-hoc analysis. Meanwhile, a data engineer uses Python scripts leveraging Snowflake’s Python connector to automate data loading and transformation tasks.

Q14. Can you explain Snowflake’s role in data storage?

Ans: Snowflake serves as a cloud-based data storage solution, providing scalable and reliable storage for structured and semi-structured data. Data in Snowflake is stored in a columnar format in cloud storage, such as Amazon S3 or Azure Blob Storage, using micro-partitions. Snowflake’s storage architecture separates compute and storage layers, allowing users to independently scale compute resources based on workload requirements without impacting data storage. Additionally, Snowflake provides features for data retention, versioning, and disaster recovery to ensure data durability and availability.

Example: An e-commerce company stores its transactional data, customer information, and product catalog in Snowflake tables, leveraging Snowflake’s scalable storage infrastructure for efficient data management and analytics.

Q15. Explain how data compression works in Snowflake and describe its advantages.

Ans: Snowflake automatically compresses all the data it stores, determining the most efficient compression algorithm for the columns in each micro-partition; users do not configure this, and the exact algorithms are not exposed. Columnar data compresses well because values within a column tend to be similar, and typical columnar techniques include run-length encoding (RLE), dictionary encoding, and delta encoding. Storage is billed on the compressed size. The advantages of data compression in Snowflake include:

- Reduced Storage Costs: Compression reduces the amount of storage required for data, resulting in lower storage costs, especially for large datasets.

- Improved Query Performance: Smaller data footprint and reduced I/O operations lead to faster query execution times and improved performance for analytical workloads.

- Efficient Data Transfer: Compressed data requires less bandwidth for data transfer between Snowflake and cloud storage, resulting in faster data loading and unloading operations.

- Scalability: Compression enables Snowflake to efficiently store and process large volumes of data, supporting scalability for growing datasets and workloads.

Example: By applying compression to a sales table containing millions of rows, Snowflake reduces the storage footprint by encoding repetitive values, leading to significant cost savings and improved query performance for analytical queries.
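Run-length and dictionary encoding are easy to demonstrate. The Python sketch below encodes a low-cardinality column such as a region code; it illustrates why repetitive columnar data compresses well and is not Snowflake’s actual codec, which is chosen automatically. The function names are hypothetical.

```python
from itertools import groupby

def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(v, len(list(group))) for v, group in groupby(values)]

def run_length_decode(pairs):
    return [v for v, count in pairs for _ in range(count)]

def dictionary_encode(values):
    """Replace each distinct value with a small integer code."""
    dictionary = {}
    codes = [dictionary.setdefault(v, len(dictionary)) for v in values]
    return list(dictionary), codes

region = ["EU"] * 4 + ["US"] * 3 + ["APAC"] * 2 + ["EU"]
print(run_length_encode(region))  # [('EU', 4), ('US', 3), ('APAC', 2), ('EU', 1)]
print(dictionary_encode(region))  # (['EU', 'US', 'APAC'], [0, 0, 0, 0, 1, 1, 1, 2, 2, 0])
```

In this model, ten values shrink to four runs, and sorting the column would merge the two EU runs; that is why data with long runs of repeated values encodes so compactly.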

Q16. What are Snowflake views?

Ans: Snowflake views are named SQL queries that present data from one or more tables as a virtual table. Views allow users to define customized data subsets, transformations, and aggregations without modifying underlying table structures. Snowflake supports regular (non-materialized) views and materialized views, and either kind can be created as a secure view, which hides the view definition and is used when sharing data. Regular views execute the underlying SQL query each time they are queried, while materialized views (Enterprise Edition and higher) store precomputed results that Snowflake keeps up to date in the background, improving performance for expensive, frequently run queries on a single table.

Example: An analyst creates a view in Snowflake that filters and aggregates sales data from multiple tables to generate a monthly sales report. The view’s SQL query calculates total sales revenue, average order value, and other metrics, providing users with a simplified and consistent view of sales performance without accessing raw data directly.

Q17. What do you mean by zero-copy cloning in Snowflake?

Ans: Zero-copy cloning in Snowflake is a feature that enables the rapid creation of new data objects, such as tables, schemas, or databases, without physically duplicating the underlying data. Instead of copying data, Snowflake creates new metadata that points to the original object’s micro-partitions, so the clone and the source share the same underlying storage. A new clone therefore consumes no additional storage and takes far less time and fewer resources to create than a full copy; storage is consumed only when data in either object is later modified.

Example: Suppose you have a large table containing historical sales data in Snowflake. Using zero-copy cloning (for example, CREATE TABLE sales_dev CLONE sales;), you can create a new table that references the same micro-partitions as the original table. When either table is later modified, Snowflake writes new micro-partitions for the changed data and leaves the shared ones untouched. The two tables remain independent, and storage overhead is limited to what has actually changed.
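To make the copy-on-write idea concrete, here is a toy Python model, not Snowflake's implementation. A table is a list of references to immutable "micro-partitions". Cloning copies only that list, and a write replaces one partition reference in one table. All class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Rows = Tuple[Tuple[str, float], ...]

@dataclass(frozen=True)
class MicroPartition:
    """Immutable block of rows; never modified in place."""
    rows: Rows

class Table:
    def __init__(self, name: str, partitions: List[MicroPartition]):
        self.name = name
        self.partitions = partitions  # the "metadata": references to partitions

    def clone(self, new_name: str) -> "Table":
        # Zero-copy: duplicate the list of references, not the partitions themselves.
        return Table(new_name, list(self.partitions))

    def update_partition(self, index: int, new_rows: Rows) -> None:
        # Copy-on-write: write a new partition and repoint only this table to it.
        self.partitions[index] = MicroPartition(new_rows)

def physical_storage(*tables: Table) -> int:
    """Count the distinct partition objects actually stored across the given tables."""
    return len({id(p) for t in tables for p in t.partitions})

p1 = MicroPartition((("2023-01-01", 10.0),))
p2 = MicroPartition((("2023-01-02", 20.0),))
sales = Table("sales", [p1, p2])
sales_dev = sales.clone("sales_dev")
print(physical_storage(sales, sales_dev))  # 2: both tables share p1 and p2

sales_dev.update_partition(1, (("2023-01-02", 25.0),))
print(physical_storage(sales, sales_dev))  # 3: only the changed partition is new
print(sales.partitions[1].rows)            # (('2023-01-02', 20.0),): original unaffected
```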

Q18. Can you briefly explain clustering in Snowflake? Ans: Clustering in Snowflake is a performance optimization technique that organizes table data according to one or more clustering keys. Snowflake stores metadata, such as the minimum and maximum values of each column, for every micro-partition. When rows with similar clustering-key values are stored together, the optimizer can use that metadata to skip (prune) micro-partitions that cannot contain matching rows. Queries that filter or join on the clustering-key columns therefore scan less data and perform less I/O. Defining a clustering key is most worthwhile for very large tables whose queries consistently filter on the same columns.

Example: For a sales table, clustering on the “Order Date” column can improve query performance for time-based analyses, such as monthly sales reports or trend analysis. Once a clustering key is defined, Snowflake’s Automatic Clustering service reclusters the table in the background as new data is inserted or updated, keeping performance consistent over time. This background maintenance consumes credits.
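Pruning is what makes clustering pay off. The toy Python model below uses hypothetical names and tiny partitions and is not Snowflake's code. It keeps only (min, max) metadata per partition and counts how many partitions a date-range filter must scan when the same rows are stored in random order versus sorted by order date.

```python
import random
from datetime import date, timedelta
from typing import List, Tuple

PARTITION_SIZE = 100  # rows per toy micro-partition (real ones hold far more)

def make_partitions(dates: List[date]) -> List[Tuple[date, date]]:
    """Split rows into fixed-size partitions and keep only their (min, max) metadata."""
    chunks = [dates[i:i + PARTITION_SIZE] for i in range(0, len(dates), PARTITION_SIZE)]
    return [(min(c), max(c)) for c in chunks]

def partitions_scanned(meta: List[Tuple[date, date]], lo: date, hi: date) -> int:
    """A partition must be scanned only if its [min, max] range overlaps [lo, hi]."""
    return sum(1 for pmin, pmax in meta if pmax >= lo and pmin <= hi)

start = date(2023, 1, 1)
order_dates = [start + timedelta(days=d % 365) for d in range(36_500)]  # 100 rows per day

random.seed(42)
unclustered = order_dates[:]
random.shuffle(unclustered)           # rows arrive in no particular order
clustered = sorted(order_dates)       # rows co-located by the clustering key

march = (date(2023, 3, 1), date(2023, 3, 31))
print("unclustered:", partitions_scanned(make_partitions(unclustered), *march), "of 365")
print("clustered:  ", partitions_scanned(make_partitions(clustered), *march), "of 365")
```

With sorted data, each toy partition holds a single day, so the March filter scans 31 partitions. With shuffled data, nearly every partition's range spans the whole year and almost nothing can be pruned.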

Q19. Can you explain the role of metadata management in Snowflake and how it contributes to data governance and lineage tracking? Ans: Metadata management in Snowflake involves capturing and storing information about database objects, schemas, queries, and user activity. This metadata supports data governance by providing visibility into data lineage, usage, and access patterns, which helps organizations comply with regulatory requirements and internal policies. Much of it is exposed through each database’s INFORMATION_SCHEMA and through the ACCOUNT_USAGE schema of the shared SNOWFLAKE database (for example, the QUERY_HISTORY and ACCESS_HISTORY views). With this metadata, administrators can track data provenance, understand dependencies between objects, and audit and enforce access controls, protecting data integrity and security.

Example: A compliance officer uses Snowflake’s metadata to trace the lineage of sensitive customer data from its source to downstream analytics reports. By analyzing metadata, the officer can identify data transformations, access permissions, and audit trails, ensuring compliance with data privacy regulations.
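Lineage questions often reduce to walking a dependency graph built from this metadata. The Python sketch below assumes the upstream relationships have already been extracted (for example, from access-history records) into a plain dictionary. The object names are hypothetical, and the traversal is an ordinary breadth-first search, not a Snowflake API.

```python
from collections import deque
from typing import Dict, List

# Hypothetical lineage metadata: each object maps to the objects it reads from.
UPSTREAM: Dict[str, List[str]] = {
    "reports.churn_dashboard": ["analytics.customer_features"],
    "analytics.customer_features": ["staging.customers_clean", "staging.orders_clean"],
    "staging.customers_clean": ["raw.crm_customers"],
    "staging.orders_clean": ["raw.shop_orders"],
}

def trace_sources(obj: str, upstream: Dict[str, List[str]]) -> List[str]:
    """Return every object that `obj` depends on, nearest first (breadth-first)."""
    seen = {obj}
    order: List[str] = []
    queue = deque([obj])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:  # guard against shared ancestors and cycles
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order

print(trace_sources("reports.churn_dashboard", UPSTREAM))
# ['analytics.customer_features', 'staging.customers_clean', 'staging.orders_clean',
#  'raw.crm_customers', 'raw.shop_orders']
```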

Q20. How does Snowflake handle concurrency and resource contention in a multi-tenant environment, and what strategies can be employed to mitigate potential performance issues? Ans: Snowflake uses a multi-cluster, shared data architecture: all compute runs in virtual warehouses that read from the same central storage layer. Different users or workloads can be assigned to separate virtual warehouses, each with its own dedicated compute resources, so that heavy ETL jobs, for example, do not slow down interactive dashboards. When more queries arrive at a warehouse than it can run at once, Snowflake queues them. A multi-cluster warehouse (Enterprise Edition and higher) can automatically start additional clusters as queries queue and shut them down when demand drops.

Strategies to mitigate performance issues include:

- isolating workloads on separate warehouses
- configuring multi-cluster warehouses for high-concurrency workloads
- sizing warehouses appropriately for query complexity
- using resource monitors to track and cap credit usage
- optimizing queries to reduce the amount of data they scan

Example: A company runs its nightly ETL jobs on one warehouse and its BI dashboards on a separate multi-cluster warehouse configured with a minimum of one and a maximum of four clusters. During peak business hours, Snowflake starts additional clusters for the dashboard warehouse as queries begin to queue and removes them as the load falls. Meanwhile, the ETL jobs never compete with dashboard queries for compute.
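The scale-out behavior of a multi-cluster warehouse can be pictured with a simplified Python model. The rule used here is an assumption for illustration: run enough clusters to avoid queuing, clamped to the configured minimum and maximum. Snowflake's actual Standard and Economy scaling policies use their own timing heuristics. The per-cluster capacity of 8 concurrent queries is also an assumed value.

```python
import math

def clusters_needed(load: int, per_cluster_capacity: int,
                    min_clusters: int, max_clusters: int) -> int:
    """Clusters required to run `load` concurrent queries without queuing, clamped to [min, max]."""
    wanted = math.ceil(load / per_cluster_capacity)
    return max(min_clusters, min(max_clusters, wanted))

def queued_queries(load: int, clusters: int, per_cluster_capacity: int) -> int:
    """Queries that must wait because every running cluster is at capacity."""
    return max(0, load - clusters * per_cluster_capacity)

# Hypothetical dashboard warehouse configured with a minimum of 1 and maximum of 4 clusters.
for load in (3, 12, 30, 50):
    n = clusters_needed(load, per_cluster_capacity=8, min_clusters=1, max_clusters=4)
    print(f"{load:>2} concurrent queries -> {n} cluster(s), "
          f"{queued_queries(load, n, 8)} queued")
```

The last case (50 queries) hits the four-cluster maximum, so 18 queries wait. The maximum is the knob that trades queuing against credit spend.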

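Q21. Can you explain caching in Snowflake and its types? Ans: Caching in Snowflake is a performance optimization that reuses previously computed results and previously retrieved data to reduce query latency and compute costs. Snowflake uses three layers of caching:

- Result Cache: Snowflake persists the result of every query for 24 hours. Snowflake returns the stored result without using a running warehouse when three conditions hold: the same query text is run again, the underlying data has not changed, and the query does not use functions whose values change on each execution (such as CURRENT_TIMESTAMP). Each reuse resets the 24-hour period, up to a maximum of 31 days from the first execution.

- Metadata Cache: The cloud services layer keeps metadata such as table row counts and the minimum and maximum values of each column in every micro-partition. This lets Snowflake prune micro-partitions during query planning and answer some queries, such as a simple COUNT(*) on a table, directly from metadata.

- Warehouse (Local Disk) Cache: Each running virtual warehouse caches the table data it has read from remote storage on its local storage, so later queries that touch the same data can read it locally. This cache is lost when the warehouse is suspended.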

Example: When a user executes a complex analytical query against a large dataset, Snowflake stores the query result after the first execution. If the same query is run again within 24 hours and the underlying data has not changed, Snowflake returns the stored result almost immediately, without consuming warehouse compute.
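The reuse rules can be sketched as a cache keyed on the exact query text plus a version number for each table the query reads, with a 24-hour expiry. This is a simplified Python model with hypothetical names. It does not model Snowflake's extra conditions, such as non-deterministic functions, or the reset of the retention period on each reuse.

```python
import time
from typing import Callable, Dict, Tuple

TTL_SECONDS = 24 * 60 * 60  # persisted results are retained for 24 hours

CacheKey = Tuple[str, Tuple[Tuple[str, int], ...]]

class ResultCache:
    def __init__(self, clock: Callable[[], float] = time.time):
        self._clock = clock
        self._entries: Dict[CacheKey, Tuple[float, list]] = {}
        self.hits = 0

    def run(self, sql: str, table_versions: Dict[str, int], execute: Callable[[], list]) -> list:
        # The key combines the exact SQL text with the current version of each table read,
        # so any change to the underlying data produces a different key (a cache miss).
        key = (sql, tuple(sorted(table_versions.items())))
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < TTL_SECONDS:
            self.hits += 1
            return cached[1]          # hit: no warehouse compute needed
        result = execute()            # miss: run the query on a warehouse
        self._entries[key] = (now, result)
        return result

fake_now = [0.0]
cache = ResultCache(clock=lambda: fake_now[0])
versions = {"sales": 1}
query = "SELECT region, SUM(amount) FROM sales GROUP BY region"

cache.run(query, versions, lambda: [("EMEA", 100)])  # miss: executes
cache.run(query, versions, lambda: [("EMEA", 100)])  # hit: reused
versions["sales"] = 2                               # underlying data changed
cache.run(query, versions, lambda: [("EMEA", 150)])  # miss: new key
fake_now[0] += TTL_SECONDS                          # 24 hours later
cache.run(query, versions, lambda: [("EMEA", 150)])  # miss: entry expired
print(cache.hits)  # 1
```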

Q22. What is Snowflake Computing? Ans: Snowflake Computing is the original name of the company behind Snowflake (now Snowflake Inc.), and the term is often used to refer to its cloud-based data platform. The platform provides scalable, elastic, and fully managed data storage and analytics services. Organizations can store, manage, and analyze structured and semi-structured data in a centralized environment without provisioning or managing infrastructure. Snowflake’s architecture separates the compute and storage layers, so users can scale each independently based on workload requirements. With features such as automatic scaling, data sharing, and native support for diverse data formats, Snowflake offers a modern data warehousing solution for organizations of all sizes.

Example: A retail company migrates its on-premises data warehouse to Snowflake Computing to leverage its cloud-native architecture and scalability for analyzing sales data, customer behavior, and inventory management in real time.

Q23. Can you discuss the role of automatic query optimization in Snowflake and how it adapts to evolving data workloads over time? Ans: Snowflake’s query optimizer is cost-based. For each query, it compares alternative execution plans using statistics about the data and chooses the plan with the lowest estimated cost. Snowflake collects these statistics automatically as data is loaded and modified (for example, row counts and the minimum and maximum values in each micro-partition). Plans therefore stay current as data volumes and distributions change, without the manual statistics gathering, index maintenance, or vacuuming that many traditional databases require. Combined with micro-partition pruning, this helps Snowflake deliver consistent performance as data volumes, query patterns, and user requirements evolve. Warehouse sizing and query design, however, remain the user’s responsibility.

Example: As a retail company’s sales data grows over time, Snowflake’s automatic query optimization identifies and implements efficient execution plans for complex analytical queries, such as sales forecasting and inventory optimization. By adapting to evolving data workloads, Snowflake ensures timely and accurate insights for business decision-making.
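A cost-based optimizer compares estimated costs of alternative plans using statistics rather than fixed rules. The toy Python function below shows the idea for one decision: picking the build side of a hash join from row-count estimates. The cost formula and the table sizes are assumptions, far simpler than what Snowflake's optimizer actually models.

```python
from typing import Dict, Tuple

def hash_join_cost(build_rows: int, probe_rows: int) -> float:
    """Toy cost: building the hash table is assumed 2x as expensive per row as probing it."""
    return 2.0 * build_rows + 1.0 * probe_rows

def choose_build_side(stats: Dict[str, int], left: str, right: str) -> Tuple[str, str, float]:
    """Return (build_table, probe_table, cost) for the cheaper of the two orderings."""
    options = [
        (left, right, hash_join_cost(stats[left], stats[right])),
        (right, left, hash_join_cost(stats[right], stats[left])),
    ]
    return min(options, key=lambda option: option[2])

# Statistics are read at planning time, so the choice can change as the data grows.
stats = {"orders": 50_000_000, "customers": 2_000}
print(choose_build_side(stats, "orders", "customers"))
# ('customers', 'orders', 50004000.0): hash the small table, stream the large one

stats["customers"] = 80_000_000  # hypothetical statistics after heavy growth
print(choose_build_side(stats, "orders", "customers"))
# ('orders', 'customers', 180000000.0): the cheaper build side has flipped
```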

Q24. Is Snowflake OLTP or OLAP? Ans: Snowflake is primarily an OLAP (Online Analytical Processing) platform designed for complex analytics, reporting, and data visualization tasks. It is optimized for handling large volumes of structured and semi-structured data and executing complex SQL queries for business intelligence and data analytics purposes. Snowflake does support ACID transactions and row-level DML (INSERT, UPDATE, DELETE, and MERGE), and its hybrid tables target some transactional (OLTP, Online Transaction Processing) use cases. However, its columnar, micro-partitioned storage is built for scanning and aggregating large datasets, not for high volumes of small, single-row reads and writes. Its architecture and feature set are therefore geared towards OLAP workloads.

Example: A financial services company uses Snowflake to analyze historical trading data, conduct risk modeling, and generate regulatory reports for compliance purposes. These OLAP workloads involve complex queries and aggregations across large datasets, making Snowflake an ideal choice for analytical processing.

Q25. What are different Snowflake editions? Ans: Snowflake offers several editions tailored to the needs of different organizations and use cases:

- Standard Edition: Suitable for small to mid-sized organizations with basic data warehousing requirements, offering standard features for data storage, processing, and analytics.

- Enterprise Edition: Designed for large enterprises and organizations with advanced data warehousing needs. It includes everything in Standard Edition plus features such as multi-cluster warehouses, extended Time Travel (up to 90 days), materialized views, and column-level security through dynamic data masking.

- Business Critical Edition: Targeted at organizations with highly sensitive data and mission-critical workloads. It adds higher levels of data protection, such as support for HIPAA and PCI DSS compliance, customer-managed keys through Tri-Secret Secure, and private connectivity to cloud provider networks. It also adds database failover and failback for business continuity and disaster recovery.

- Virtual Private Snowflake (VPS): Offers the highest level of security by providing a completely separate Snowflake environment, isolated from all other Snowflake accounts, for organizations with the strictest compliance and regulatory requirements, such as financial institutions.

Example: A multinational corporation opts for the Enterprise Edition of Snowflake to support its complex data warehousing and analytics needs. These include multi-cluster warehouses for high-concurrency query processing, 90-day Time Travel for recovering historical data, and dynamic data masking for fine-grained control over sensitive columns.

Q26. What is the best way to remove a string that is an anagram of an earlier string from an array? Ans: To remove a string that is an anagram of an earlier string from an array, you can follow these steps:

- Iterate through the array and compute a canonical key for each string by sorting its characters; all anagrams of a string share the same key.

- Keep a set of the keys seen so far.

- If a string’s key is already in the set, the string is an anagram of an earlier string, so skip it; otherwise, add the string to the result and record its key.

- Return the result, which contains no anagrams of earlier strings.

Example (in Python):
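A minimal sketch of the steps above. It assumes that comparison is case-sensitive and that the first string in each group of anagrams is the one kept. The function name remove_later_anagrams is illustrative.

```python
from typing import List

def remove_later_anagrams(words: List[str]) -> List[str]:
    """Keep each string unless it is an anagram of a string kept earlier."""
    seen_keys = set()            # canonical (sorted) forms of strings kept so far
    result: List[str] = []
    for word in words:
        key = "".join(sorted(word))  # anagrams share the same sorted characters
        if key in seen_keys:
            continue                 # anagram of an earlier string: drop it
        seen_keys.add(key)
        result.append(word)
    return result

print(remove_later_anagrams(["listen", "google", "silent", "enlist", "elgoog", "cat"]))
# ['listen', 'google', 'cat']
```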

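This approach removes every string that is an anagram of an earlier string and preserves the order of the strings that remain. Sorting each string’s characters gives a canonical key shared by all of its anagrams. A set of keys already seen turns each check into a fast lookup instead of a comparison against every predecessor.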

Q27. Does Snowflake support stored procedures? Ans: Yes. Snowflake supports stored procedures, which are named database objects that contain procedural logic, are stored in the database, and are executed on demand with the CALL command. Procedures can be written in Snowflake Scripting (SQL), JavaScript, Python, Java, or Scala. They enable encapsulation of complex logic, reusable code, and transaction management within the database environment. Snowflake also provides arguments and return values, exception handling, and transaction control statements to enhance the functionality and flexibility of stored procedures.

Example: A data engineer creates a stored procedure in Snowflake to automate data loading, transformation, and validation tasks for a daily ETL pipeline. The stored procedure encapsulates the logic for extracting data from source systems, applying business rules, and loading cleansed data into target tables, providing a streamlined and reusable solution for data processing.

Q28. What is the use of Snowflake Connectors? Ans: Snowflake Connectors are software components that facilitate seamless integration between Snowflake and external systems, applications, and data sources. Snowflake provides a variety of connectors for different use cases, including:

- JDBC and ODBC Drivers: Enable connectivity to Snowflake from a wide range of programming languages, applications, and BI tools through the industry-standard JDBC and ODBC interfaces.

- Python Connector: Allows Python applications to interact with Snowflake databases, execute SQL queries, and load data using a native Python interface.

- Spark Connector: Integrates Snowflake with Apache Spark, enabling data exchange and processing between Spark dataframes and Snowflake tables for distributed data analytics and machine learning workflows.

- Kafka Connector: Facilitates near-real-time data ingestion from Apache Kafka topics into Snowflake tables (using Snowpipe or Snowpipe Streaming) for streaming analytics, event processing, and data warehousing applications.

- Partner Integrations: Popular data integration platforms such as Informatica, Talend, and Matillion offer pre-built Snowflake connectors, simplifying data integration, ETL, and ELT workflows between Snowflake and other data sources.

Example: A data engineer uses the Snowflake JDBC Driver to connect a Java application to a Snowflake database. The application can then query and manipulate data stored in Snowflake tables through standard JDBC API calls.

Q29. Can you explain how Snowflake differs from AWS (Amazon Web Services)? Ans: Snowflake is a cloud-based data warehousing platform, while AWS (Amazon Web Services) is a comprehensive cloud computing platform that offers a wide range of infrastructure, storage, and application services. Snowflake can run on AWS infrastructure (as well as on Microsoft Azure and Google Cloud), but it differs from AWS in several key aspects:

- Service Focus: Snowflake is primarily focused on providing data warehousing and analytics services, whereas AWS offers a broad portfolio of cloud services, including computing, storage, networking, databases, machine learning, and IoT.

- Managed Service: Snowflake is a fully managed service, meaning that infrastructure provisioning, configuration, maintenance, and scaling are handled by Snowflake, allowing users to focus on data analytics and insights. In contrast, AWS offers a mix of managed and self-managed services, requiring users to manage infrastructure and resources to varying degrees.

- Architecture: Snowflake follows a multi-cluster, shared data architecture that separates the compute and storage layers, providing scalability, concurrency, and performance optimization for analytical workloads. AWS offers separate building blocks, such as EC2 for compute, S3 for object storage, and Redshift for data warehousing, which can be combined to build custom data processing and analytics solutions.

- Pricing Model: Snowflake charges separately for compute and storage. Compute is billed in credits per second while virtual warehouses are running, with a 60-second minimum each time a warehouse starts or resumes. Storage is billed per terabyte of compressed data per month. AWS also uses a pay-as-you-go model, but each service is priced separately, so users pay for the compute instances, storage volumes, data transfer, and other resources they consume.
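The compute side of Snowflake's pricing is easy to estimate by hand. The Python sketch below makes three assumptions. It uses the standard credits-per-hour rates for warehouse sizes X-Small through X-Large (1, 2, 4, 8, 16, doubling at each size). It applies per-second billing with a 60-second minimum each time a warehouse resumes. It uses a placeholder price of $3.00 per credit, since the real price varies by edition, cloud, and region.

```python
from typing import List

# Credits consumed per hour of running time for standard warehouse sizes.
CREDITS_PER_HOUR = {"X-Small": 1, "Small": 2, "Medium": 4, "Large": 8, "X-Large": 16}

def compute_credits(size: str, run_seconds: List[int]) -> float:
    """Credits for a warehouse that ran for the given stretches (one entry per resume)."""
    billed = sum(max(60, s) for s in run_seconds)  # 60-second minimum per resume
    return CREDITS_PER_HOUR[size] * billed / 3600

def compute_cost(size: str, run_seconds: List[int], price_per_credit: float) -> float:
    """Dollar cost of compute; price_per_credit is an assumed input, not a published rate."""
    return compute_credits(size, run_seconds) * price_per_credit

# A Medium warehouse resumed three times: two short queries and one 30-minute batch.
runs = [20, 45, 1800]                 # billed as 60 + 60 + 1800 = 1920 seconds
print(compute_credits("Medium", runs))                                  # about 2.13 credits
print(round(compute_cost("Medium", runs, price_per_credit=3.00), 2))    # 6.4
```

Storage appears as a separate line on the bill, so a warehouse that stays suspended costs nothing for compute while its tables continue to accrue storage charges.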