Traveling salesman problem

This web page is a duplicate of https://optimization.mccormick.northwestern.edu/index.php/Traveling_salesman_problems

Author: Jessica Yu (ChE 345 Spring 2014)

Steward: Dajun Yue, Fengqi You

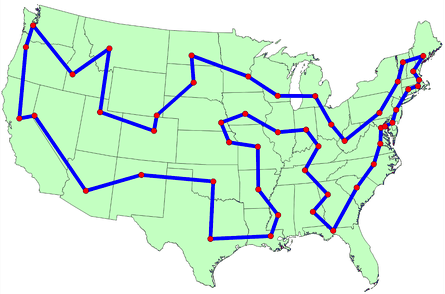

The traveling salesman problem (TSP) is a widely studied combinatorial optimization problem, which, given a set of cities and a cost to travel from one city to another, seeks to identify the tour that will allow a salesman to visit each city only once, starting and ending in the same city, at the minimum cost. 1


The origins of the traveling salesman problem are obscure; it is mentioned in an 1832 manual for traveling salesmen, which included example tours of 45 German cities but gave no mathematical consideration. 2 W. R. Hamilton and Thomas Kirkman devised mathematical formulations of the problem in the 1800s. 2

It is believed that the general form was first studied by Karl Menger in Vienna and Harvard in the 1930s. 2,3

Hassler Whitney, who was working on his Ph.D. research at Harvard when Menger was a visiting lecturer, is believed to have posed the problem of finding the shortest route between the 48 states of the United States during either his 1931-1932 or 1934 seminar talks. 2 There is also uncertainty surrounding the individual who coined the name “traveling salesman problem” for Whitney’s problem. 2

The problem became increasingly popular in the 1950s and 1960s. Notably, George Dantzig, Delbert R. Fulkerson, and Selmer M. Johnson at the RAND Corporation in Santa Monica, California solved the 48-state problem by formulating it as a linear programming problem. 2 The methods described in their paper set the foundation for future work in combinatorial optimization, especially highlighting the importance of cutting planes. 2,4

In the early 1970s, the concept of P vs. NP problems created buzz in the theoretical computer science community. In 1972, Richard Karp demonstrated that the Hamiltonian cycle problem was NP-complete, implying that the traveling salesman problem was NP-hard. 4

Increasingly sophisticated codes led to rapid increases in the sizes of the traveling salesman problems solved. Dantzig, Fulkerson, and Johnson had solved a 48-city instance of the problem in 1954. 5 Martin Grötschel more than doubled this 23 years later, solving a 120-city instance in 1977. 5 Harlan Crowder and Manfred W. Padberg again more than doubled this in just 3 years, with a 318-city solution. 5

In 1987, rapid improvements were made, culminating in a 2,392-city solution by Padberg and Giovanni Rinaldi. In the following two decades, David L. Applegate, Robert E. Bixby, Vašek Chvátal, and William J. Cook led the cutting edge, solving a 7,397-city instance in 1994 up to the current largest solved problem of 24,978 cities in 2004. 5

Description

Graph theory.

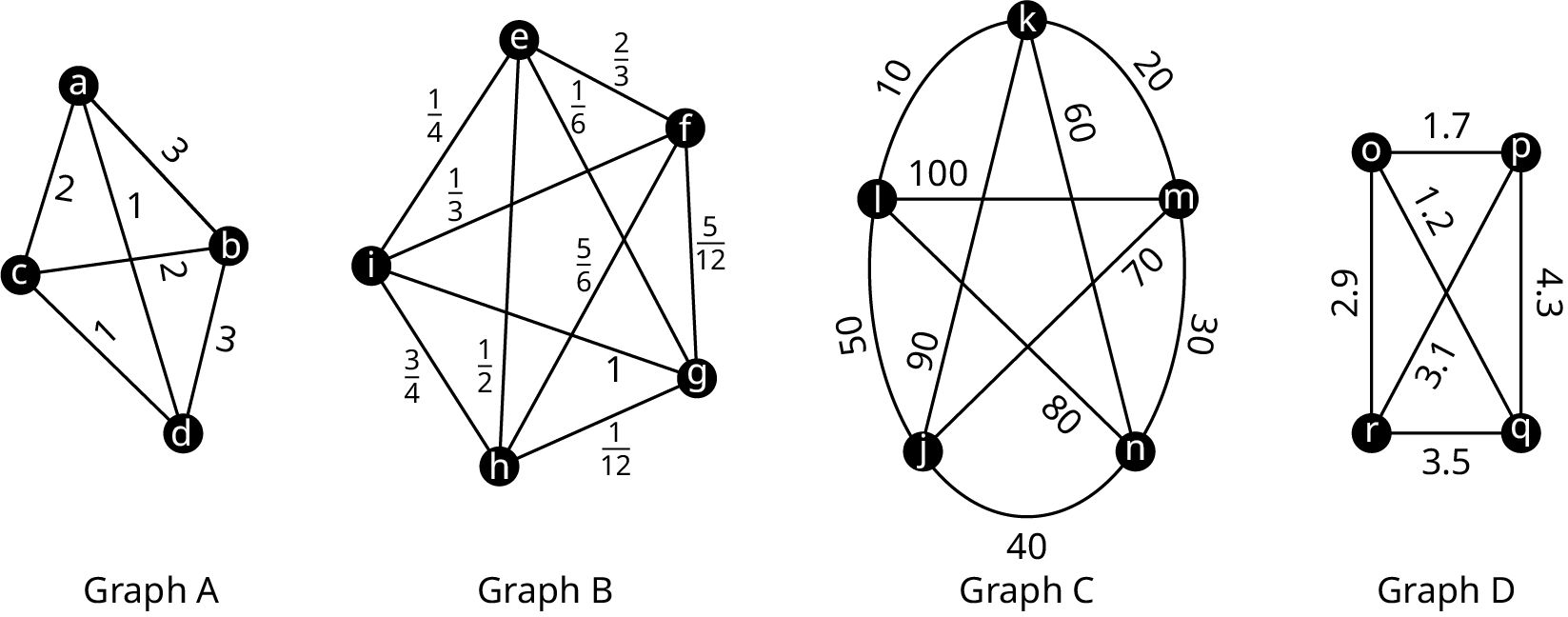

In the context of the traveling salesman problem, the vertices correspond to cities and the edges correspond to the paths between those cities. When modeled as a complete graph, paths that do not exist between cities can be modeled as edges of very large cost without loss of generality. 6 Minimizing the sum of the costs for a Hamiltonian cycle is equivalent to identifying the shortest path in which each city is visited only once.

Classifications of the TSP

The TSP can be divided into two classes depending on the nature of the cost matrix. 3,6

- Symmetric TSP (sTSP): applies when the distance between cities is the same in both directions

- Asymmetric TSP (aTSP): applies when there are differences in distances (e.g. one-way streets)

An ATSP can be formulated as an STSP by doubling the number of nodes. 6

Variations of the TSP

Formulation

The objective function is then given by

To ensure that the result is a valid tour, several constraints must be added. 1,3

There are several other formulations for the subtour elimination constraint, including circuit packing constraints, MTZ constraints, and network flow constraints.

aTSP ILP Formulation

The integer linear programming formulation for an aTSP is given by
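A standard way to write this formulation is sketched below. The notation is an assumption rather than a transcription of the original page: V is the set of cities, c_ij is the cost of traveling from city i to city j, x_ij equals 1 if the tour goes directly from i to j and 0 otherwise, and the subtour elimination constraints are written in the Dantzig-Fulkerson-Johnson form.

```latex
\begin{align}
\min \quad & \sum_{i \in V} \sum_{j \in V,\, j \neq i} c_{ij} x_{ij} \\
\text{s.t.} \quad & \sum_{j \in V,\, j \neq i} x_{ij} = 1 && \forall i \in V \\
& \sum_{i \in V,\, i \neq j} x_{ij} = 1 && \forall j \in V \\
& \sum_{i \in S} \sum_{j \in S,\, j \neq i} x_{ij} \leq |S| - 1 && \forall S \subsetneq V,\ |S| \geq 2 \\
& x_{ij} \in \{0, 1\} && \forall i, j \in V,\ i \neq j
\end{align}
```

The first two constraint families force each city to be left exactly once and entered exactly once; the third forbids any closed loop on a proper subset of the cities.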

sTSP ILP Formulation

The symmetric case is a special case of the asymmetric case and the above formulation is valid. 3, 6 The integer linear programming formulation for an sTSP is given by
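One common way to state the symmetric formulation uses a single variable per undirected edge. The notation below is assumed for illustration: E is the edge set, c_e is the cost of edge e, x_e equals 1 if edge e is in the tour, δ(i) is the set of edges incident to city i, and E(S) is the set of edges with both endpoints in S.

```latex
\begin{align}
\min \quad & \sum_{e \in E} c_e x_e \\
\text{s.t.} \quad & \sum_{e \in \delta(i)} x_e = 2 && \forall i \in V \\
& \sum_{e \in E(S)} x_e \leq |S| - 1 && \forall S \subsetneq V,\ |S| \geq 3 \\
& x_e \in \{0, 1\} && \forall e \in E
\end{align}
```

Each city must touch exactly two tour edges, and no proper subset of cities may contain enough tour edges to close a cycle.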

Exact algorithms

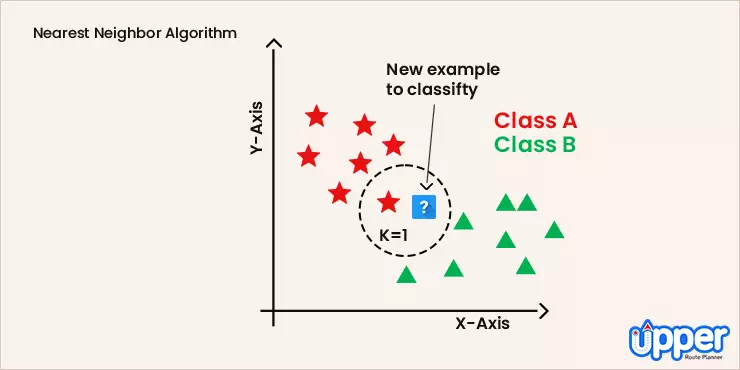

Branch-and-bound algorithms are commonly used to find solutions for TSPs. 7 The ILP is first relaxed and solved as an LP using the Simplex method, then feasibility is regained by enumeration of the integer variables. 7

Other exact solution methods include the cutting plane method and branch-and-cut. 8

Heuristic algorithms

Given that the TSP is an NP-hard problem, heuristic algorithms are commonly used to give approximate solutions that are good, though not necessarily optimal. The algorithms do not guarantee an optimal solution, but give near-optimal solutions in reasonable computational time. 3 The Held-Karp lower bound can be calculated and used to judge the performance of a heuristic algorithm. 3

There are two general heuristic classifications 7 :

- Tour construction procedures where a solution is gradually built by adding a new vertex at each step

- Tour improvement procedures where a feasible solution is improved upon by performing various exchanges

The best methods tend to be composite algorithms that combine these features. 7

Tour construction procedures

Tour improvement procedures

Applications

The importance of the traveling salesman problem is twofold. First is its ubiquity as a platform for the study of general methods that can then be applied to a variety of other discrete optimization problems. 5 Second is its diverse range of applications, in fields including mathematics, computer science, genetics, and engineering. 5,6

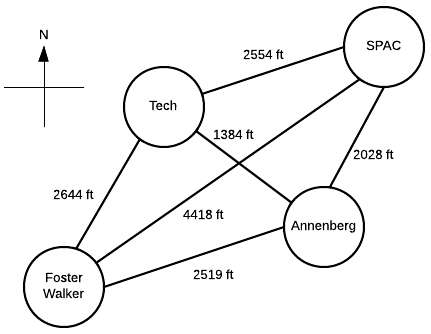

Suppose a Northwestern student, who lives in Foster-Walker, has to accomplish the following tasks:

- Drop off a homework set at Tech

- Work out at SPAC

- Complete a group project at Annenberg

Distances between buildings can be found using Google Maps. Note that there is particularly strong western wind and walking east takes 1.5 times as long.

It is the middle of winter and the student wants to spend the least possible time walking. Determine the path the student should take in order to minimize walking time, starting and ending at Foster-Walker.

Start with the cost matrix (with altered distances taken into account):

Method 1: Complete Enumeration

All possible paths are considered and the path of least cost is the optimal solution. Note that this method is only feasible given the small size of the problem.

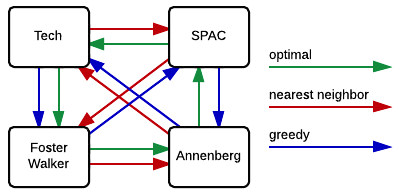

From inspection, we see that Path 4 is the shortest. So, the student should walk 2.28 miles in the following order: Foster-Walker → Annenberg → SPAC → Tech → Foster-Walker

Method 2: Nearest neighbor

Starting from Foster-Walker, the next building is simply the closest building that has not yet been visited. With only four nodes, this can be done by inspection:

- Smallest distance from Foster-Walker is to Annenberg

- Smallest distance from Annenberg is to Tech

- Smallest distance from Tech is to Annenberg ( creates a subtour, therefore skip )

- Next smallest distance from Tech is to Foster-Walker ( creates a subtour, therefore skip )

- Next smallest distance from Tech is to SPAC

- Smallest distance from SPAC is to Annenberg ( creates a subtour, therefore skip )

- Next smallest distance from SPAC is to Tech ( creates a subtour, therefore skip )

- Next smallest distance from SPAC is to Foster-Walker

So, the student would walk 2.54 miles in the following order: Foster-Walker → Annenberg → Tech → SPAC → Foster-Walker

Method 3: Greedy

With this method, the shortest paths that do not create a subtour are selected until a complete tour is created.

- Smallest distance is Annenberg → Tech

- Next smallest is SPAC → Annenberg

- Next smallest is Tech → Annenberg ( creates a subtour, therefore skip )

- Next smallest is Annenberg → Foster-Walker ( creates a subtour, therefore skip )

- Next smallest is SPAC → Tech ( creates a subtour, therefore skip )

- Next smallest is Tech → Foster-Walker

- Next smallest is Annenberg → SPAC ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → Annenberg ( creates a subtour, therefore skip )

- Next smallest is Tech → SPAC ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → Tech ( creates a subtour, therefore skip )

- Next smallest is SPAC → Foster-Walker ( creates a subtour, therefore skip )

- Next smallest is Foster-Walker → SPAC

So, the student would walk 2.40 miles in the following order: Foster-Walker → SPAC → Annenberg → Tech → Foster-Walker
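The edge-selection procedure above can be sketched in Python as follows. The cost matrix is hypothetical (it is not the campus matrix from this example), `greedy_tour` is an illustrative name, and the sketch assumes a complete directed graph given as a square matrix.

```python
def greedy_tour(cost):
    """Greedy edge heuristic: repeatedly take the cheapest arc that keeps a valid partial tour."""
    n = len(cost)
    arcs = sorted((cost[i][j], i, j) for i in range(n) for j in range(n) if i != j)
    succ = [None] * n  # succ[i] = city visited right after i
    pred = [None] * n  # pred[j] = city visited right before j
    chosen = 0
    for _, i, j in arcs:
        if succ[i] is not None or pred[j] is not None:
            continue  # i already has a departure or j already has an arrival
        # Follow the partial path that starts at j; if it ends at i, the arc closes a cycle.
        end, length = j, 1
        while succ[end] is not None:
            end = succ[end]
            length += 1
        if end == i and length < n:
            continue  # would create a subtour, therefore skip
        succ[i], pred[j] = j, i
        chosen += 1
        if chosen == n:
            break
    tour = [0]
    while len(tour) < n:
        tour.append(succ[tour[-1]])
    return tour, sum(cost[a][succ[a]] for a in range(n))


# Hypothetical asymmetric costs for four locations labeled 0..3.
COST = [
    [0, 12, 10, 19],
    [14, 0, 3, 7],
    [11, 4, 0, 6],
    [20, 8, 5, 0],
]
print(greedy_tour(COST))
```

On this made-up matrix the greedy tour costs 41, while the best of the six possible tours costs 35, which mirrors the gap between the heuristic and the optimum seen in the example.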

As we can see in the figure to the right, the heuristic methods did not give the optimal solution. That is not to say that heuristics can never give the optimal solution, just that it is not guaranteed.

Both the optimal and the nearest neighbor algorithms suggest that Annenberg is the optimal first building to visit. However, the optimal solution then goes to SPAC, while both heuristic methods suggest Tech. This is in part due to the large cost of SPAC → Foster-Walker. The heuristic algorithms cannot take this future cost into account, and therefore fall into that local optimum.

We note that the nearest neighbor and greedy algorithms give solutions that are 11.4% and 5.3%, respectively, above the optimal solution. In the scale of this problem, this corresponds to fractions of a mile. We also note that neither heuristic gave the worst case result, Foster-Walker → SPAC → Tech → Annenberg → Foster-Walker.

Only tour building heuristics were used. Combined with a tour improvement algorithm (such as 2-opt or simulated annealing), we imagine that we may be able to locate solutions that are closer to the optimum.
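As a rough illustration of a tour improvement step, the sketch below applies a simple 2-opt style segment reversal to a starting tour. The cost matrix is hypothetical, the function names are illustrative, and the tour cost is recomputed from scratch after each reversal so that the sketch stays valid for asymmetric costs, at the price of efficiency.

```python
def tour_cost(cost, tour):
    """Total cost of the closed tour, including the return to the start."""
    n = len(tour)
    return sum(cost[tour[k]][tour[(k + 1) % n]] for k in range(n))


def two_opt(cost, tour):
    """Reverse segments of the tour for as long as a reversal lowers the total cost."""
    tour = list(tour)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                if tour_cost(cost, candidate) < tour_cost(cost, tour):
                    tour, improved = candidate, True
    return tour, tour_cost(cost, tour)


# Hypothetical asymmetric costs for four locations labeled 0..3.
COSTS = [
    [0, 12, 10, 19],
    [14, 0, 3, 7],
    [11, 4, 0, 6],
    [20, 8, 5, 0],
]
print(two_opt(COSTS, [0, 1, 2, 3]))
```

Starting from a tour of cost 41 on this made-up matrix, a single reversal reaches a tour of cost 35, after which no further reversal helps.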

The exact algorithm used was complete enumeration, but we note that this is impractical even for 7 nodes (6! or 720 different possibilities). Commonly, the problem would be formulated and solved as an ILP to obtain exact solutions.

1. Vanderbei, R. J. (2001). Linear programming: Foundations and extensions (2nd ed.). Boston: Kluwer Academic.

2. Schrijver, A. (n.d.). On the history of combinatorial optimization (till 1960).

3. Matai, R., Singh, S., & Lal, M. (2010). Traveling salesman problem: An overview of applications, formulations, and solution approaches. In D. Davendra (Ed.), Traveling Salesman Problem, Theory and Applications. InTech.

4. Junger, M., Liebling, T., Naddef, D., Nemhauser, G., Pulleyblank, W., Reinelt, G., Rinaldi, G., & Wolsey, L. (Eds.). (2009). 50 years of integer programming, 1958-2008: The early years and state-of-the-art surveys. Heidelberg: Springer.

5. Cook, W. (2007). History of the TSP. The Traveling Salesman Problem. Retrieved from http://www.math.uwaterloo.ca/tsp/history/index.htm

6. Punnen, A. P. (2002). The traveling salesman problem: Applications, formulations and variations. In G. Gutin & A. P. Punnen (Eds.), The Traveling Salesman Problem and its Variations. Netherlands: Kluwer Academic Publishers.

7. Laporte, G. (1992). The traveling salesman problem: An overview of exact and approximate algorithms. European Journal of Operational Research, 59(2), 231–247.

8. Goyal, S. (n.d.). A survey on travelling salesman problem.


Traveling Salesperson Problem


A salesperson needs to visit a set of cities to sell their goods. They know how many cities they need to go to and the distances between each city. In what order should the salesperson visit each city exactly once so that they minimize their travel time and so that they end their journey in their city of origin?

The traveling salesperson problem is a long-studied problem in computer science that extends the Hamiltonian Circuit Problem. It has important implications in complexity theory and the P versus NP problem because its decision version is an NP-Complete problem (the optimization version is NP-Hard). This means that no polynomial-time algorithm for it is known, and none exists unless P = NP. In other words, for the exact algorithms known today, as the number of vertices increases linearly, the worst-case computation time to solve the problem increases exponentially.

The following image is a simple example of a network of cities connected by edges of a specific distance. The origin city is also marked.

Network of cities

Here is the solution for that network; it has a distance traveled of only 14. Any other path that the salesperson can take will result in a path length that is more than 14.

Relationship to Graphs


The traveling salesperson problem can be modeled as a graph. Specifically, it is typically a directed, weighted graph. Each city acts as a vertex and each path between cities is an edge. Instead of distances, each edge has a weight associated with it. In this model, the goal of the traveling salesperson problem can be defined as finding a path that visits every vertex, returns to the original vertex, and minimizes total weight.

To that end, many graph algorithms can be used on this model. Search algorithms like breadth-first search (BFS), depth-first search (DFS), and Dijkstra's shortest path algorithm can certainly be used; however, they do not take into consideration the fact that every vertex must be visited.

The Traveling Salesperson Problem (TSP), an NP-Complete problem, is notoriously complicated to solve. That is because the brute-force approach is so computationally intensive. The brute-force approach to solving this problem would be to try every single possible path and see which one is the fastest. Try this conceptual question to see if you have a grasp for how hard it is to solve.

For a fully connected map with \(n\) cities, how many total paths are possible for the traveling salesperson?

Answer: There are \((n-1)!\) total paths the salesperson can take. The computation needed to solve this problem in this way grows far too quickly to be a reasonable solution. If this map has only 5 cities, there are \(4!\), or 24, paths. However, if the size of this map is increased to 20 cities, there will be \(19! \approx 1.22 \cdot 10^{17}\) paths!
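The growth in the number of paths is easy to check numerically. The short sketch below uses only the standard library; `count_tours` is an illustrative name, and it counts directed tours from a fixed origin city.

```python
import math


def count_tours(n):
    """Number of directed tours from a fixed origin on a fully connected map of n cities."""
    return math.factorial(n - 1)


for n in (5, 10, 20):
    print(n, count_tours(n))
```

For 20 cities the count is 121,645,100,408,832,000, which is the \(1.22 \cdot 10^{17}\) figure quoted above.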

The brute-force approach to TSP would go like this:

- Find all possible paths.

- Find the cost of every path.

- Choose the path with the lowest cost.

Another, more literally greedy, approach might be: at every step in the algorithm, choose the best available next step. This version runs much more quickly, but it's not guaranteed to find the best answer, or an answer at all, since it might hit a dead end.

For NP-Hard problems like TSP (the NP-Complete problems are the NP-Hard problems that also belong to NP), exact solutions can only be computed in a reasonable amount of time for small input sizes (maps with few cities). Otherwise, the best we can do is use a heuristic that steers the search toward good tours. However, heuristic solutions are generally not guaranteed to be optimal; they trade some solution quality for speed.

Small input sizes

As described in a previous section, the brute-force approach to this problem has a complexity of \(O(n!)\). However, there are some approaches that decrease this computation time.

The Held-Karp Algorithm is one of the earliest applications of dynamic programming. Its complexity is much lower than the brute-force approach at \(O(n^2 2^n)\). Basically what this algorithm says is that every subpath along an optimal path is itself an optimal path. So, computing an optimal path is the same as computing many smaller subpaths and combining them.
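A minimal sketch of the Held-Karp recurrence follows, assuming the map is given as a square matrix of edge weights and the tour starts and ends at city 0. The matrix values and the name `held_karp` are hypothetical; subsets of cities are encoded as bitmasks.

```python
from itertools import combinations


def held_karp(dist):
    """Length of the shortest tour that starts and ends at city 0 (needs at least 2 cities)."""
    n = len(dist)
    # best[(mask, j)]: cheapest path that starts at 0, visits exactly the cities
    # whose bits are set in mask, and ends at city j (j is one of those cities).
    best = {(1 << j, j): dist[0][j] for j in range(1, n)}
    for size in range(2, n):
        for subset in combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                rest = mask & ~(1 << j)
                best[(mask, j)] = min(
                    best[(rest, k)] + dist[k][j] for k in subset if k != j
                )
    full = (1 << n) - 2  # every city except the origin
    return min(best[(full, j)] + dist[j][0] for j in range(1, n))


# Hypothetical directed edge weights for four cities labeled 0..3.
DIST = [
    [0, 12, 10, 19],
    [14, 0, 3, 7],
    [11, 4, 0, 6],
    [20, 8, 5, 0],
]
print(held_karp(DIST))
```

Each table entry is built from entries for a subset that is one city smaller, which is where the \(O(n^2 2^n)\) running time comes from: there are about \(n 2^n\) entries and each takes \(O(n)\) work.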

Heuristics are a way of ranking possible next steps in an algorithm in the hopes of cutting down computation time for the entire algorithm. They are often a tradeoff of some attribute - such as completeness, accuracy, or precision - in favor of speed. Heuristics exist for the traveling salesperson problem as well.

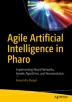

The simplest heuristic for this problem is the greedy heuristic. This heuristic simply says, at each step of the network traversal, choose the best next step. In other words, always choose the closest city that you have not yet visited. This heuristic seems like a good one because it is simple and intuitive, and it is even used in practice sometimes; however, there are heuristics that are proven to be more effective.

Christofides' algorithm is another heuristic. For metric TSP, it produces a tour whose weight is at most 1.5 times the optimal weight. This algorithm involves finding a minimum spanning tree for the network. Next, it computes a minimum-weight perfect matching on the cities that have odd degree in that tree (meaning they have an odd number of tree edges coming out of them), finds an Eulerian circuit in the combined graph, and converts it back to a TSP tour by skipping cities that have already been visited.

Even though it is typically impractical to solve large TSP instances optimally, there are special cases of TSP for which solutions of guaranteed quality can be found if certain conditions hold.

The metric-TSP is an instance of TSP that satisfies this condition: The distance from city A to city B is less than or equal to the distance from city A to city C plus the distance from city C to city B. Or,

\[distance_{AB} \leq distance_{AC} + distance_{CB}\]

This is a condition that holds for straight-line distances in the real world, but it can't always be expected to hold for every TSP problem. With this inequality in place, however, a simple approximation built from a minimum spanning tree produces a tour that is no more than twice the length of the optimal tour. Even better, we can bound the solution to a \(3/2\) approximation by using Christofides' algorithm.

The Euclidean TSP places an even stricter constraint on the TSP input. It requires that all edge weights in the network be Euclidean distances between the cities. Euclidean TSP is still NP-hard, but approximation algorithms that exploit the geometry, such as those built on Euclidean minimum spanning trees, can run faster than on general inputs. In practice, though, simpler heuristics are still used.
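The factor-of-two bound for metric TSP is usually obtained with the "double-tree" method: build a minimum spanning tree, walk it in preorder, and skip cities already visited. The sketch below is one possible version, assuming cities are points in the plane (so the triangle inequality holds); the coordinates and the name `double_tree_tour` are hypothetical.

```python
import math


def double_tree_tour(points):
    """Preorder walk of a minimum spanning tree, returned as (tour, tour length)."""
    n = len(points)

    def dist(a, b):
        return math.dist(points[a], points[b])

    # Prim's algorithm, O(n^2), rooted at city 0.
    in_tree = [False] * n
    parent = [None] * n
    best = [math.inf] * n
    best[0] = 0.0
    children = [[] for _ in range(n)]
    for _ in range(n):
        u = min((v for v in range(n) if not in_tree[v]), key=lambda v: best[v])
        in_tree[u] = True
        if parent[u] is not None:
            children[parent[u]].append(u)
        for v in range(n):
            if not in_tree[v] and dist(u, v) < best[v]:
                best[v], parent[v] = dist(u, v), u

    # Preorder traversal of the tree gives the visiting order.
    tour, stack = [], [0]
    while stack:
        u = stack.pop()
        tour.append(u)
        stack.extend(reversed(children[u]))
    length = sum(dist(tour[k], tour[(k + 1) % n]) for k in range(n))
    return tour, length


# Hypothetical city coordinates.
POINTS = [(0, 0), (0, 2), (3, 2), (5, 0)]
print(double_tree_tour(POINTS))
```

The tour is never longer than twice the weight of the spanning tree, and the spanning tree is never heavier than the optimal tour, which gives the bound.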

The P versus NP problem is one of the leading questions in modern computer science. It asks whether or not every problem whose solution can be verified in polynomial time by a computer can also be solved in polynomial time by a computer. TSP, for example, is not known to be solvable in polynomial time (and is widely believed not to be). However, a proposed solution can be verified in polynomial time when TSP is phrased as a decision problem like this: Given a graph and an integer, x, decide if there is a tour of length x or less. Given a proposed tour, it is simple to check that it visits every city exactly once and that its length is less than or equal to x.

The traveling salesperson problem, like other problems that are NP-Complete, is very important to this debate. That is because if a polynomial time solution can be found for this problem, then \(P = NP\). As it stands, most scientists believe that \(P \ne NP\).

The traveling salesperson problem has many applications. The obvious ones are in the transportation space. Planning delivery routes or flight patterns, for example, would benefit immensely from breakthroughs in this problem or in the P versus NP problem.

However, this same logic can be applied to many facets of planning as well. In robotics, for instance, planning the order in which to drill holes in a circuit board is a complex task due to the sheer number of holes that must be drilled.

The best and most important application of TSP, however, comes from the fact that it is an NP-Complete problem. That means that its practical applications amount to the applications of any problem that is NP-Complete. So, if there are significant breakthroughs for TSP, those exact same breakthroughs can be applied to any problem in the NP-Complete class.
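To make the verification step concrete, here is a small sketch of a checker for the decision version. The weight matrix is hypothetical and `verify_tour` is an illustrative name; the check runs in time linear in the number of cities, apart from the sort.

```python
def verify_tour(weights, tour, x):
    """Return True if tour visits every city exactly once and its closed length is at most x."""
    n = len(weights)
    if sorted(tour) != list(range(n)):
        return False  # a city is missing or repeated
    total = sum(weights[tour[k]][tour[(k + 1) % n]] for k in range(n))
    return total <= x


# Hypothetical directed edge weights for four cities labeled 0..3.
WEIGHT_MATRIX = [
    [0, 12, 10, 19],
    [14, 0, 3, 7],
    [11, 4, 0, 6],
    [20, 8, 5, 0],
]
print(verify_tour(WEIGHT_MATRIX, [0, 1, 3, 2], 35))
```

Checking a given tour is cheap; the hard part, for which no polynomial-time method is known, is finding such a tour in the first place.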


12.10: Traveling Salesperson Problem


Learning Objectives

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths. In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semi-conductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.214. Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of 10 + 2 + 11 + 13 = 36.

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A: 10 + 2 + 11 + 13 = 36

B: 10 + 2 + 11 + 8 = 31

C: 10 + 2 + 15 + 1 = 28

D: 10 + 2 + 15 + 6 = 33

E: 10 + 7 + 3 + 20 = 40

F: 10 + 7 + 3 + 14 = 34

G: 10 + 7 + 4 + 11 = 32

H: 10 + 7 + 4 + 5 = 26

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
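The two strategies can be compared in a few lines of Python. In this sketch the tree is rebuilt from the branch sums listed above, each node is written as a `(value, children)` pair, and the function names are illustrative.

```python
# Tree reconstructed from branches A through H: each node is (value, children).
TREE = (10, [
    (2, [(11, [(13, []), (8, [])]),
         (15, [(1, []), (6, [])])]),
    (7, [(3, [(20, []), (14, [])]),
         (4, [(11, []), (5, [])])]),
])


def brute_force_sum(node):
    """Explore every branch and return the largest total."""
    value, children = node
    return value + max((brute_force_sum(child) for child in children), default=0)


def greedy_sum(node):
    """At each phase, step to the child with the largest value."""
    total, children = node
    while children:
        value, children = max(children, key=lambda child: child[0])
        total += value
    return total


print(brute_force_sum(TREE))  # 40, branch E
print(greedy_sum(TREE))       # 32, branch G
```

The brute force version touches every node of the tree, while the greedy version only looks at the children of the node it is currently on.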

Example 12.42

Distinguishing between brute force and greedy algorithms.

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
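The cashier's procedure can be written as a short greedy routine. This is a sketch that works in whole cents to avoid rounding problems; `greedy_change` is an illustrative name, and the coin list is assumed to be sorted from largest to smallest.

```python
def greedy_change(amount_cents, coins=(25, 10, 5, 1)):
    """Repeatedly take the largest coin that does not exceed the remaining amount."""
    used = []
    for coin in coins:  # coins must be listed from largest to smallest
        while amount_cents >= coin:
            used.append(coin)
            amount_cents -= coin
    return used


print(greedy_change(37))  # [25, 10, 1, 1]
```

For U.S. coins this greedy choice happens to give the fewest coins, but that is a property of the coin values and not of greedy algorithms in general.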


The traveling salesperson problem.

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles, the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.219 representing the four U.S. Air Force bases: Vandenberg, Edwards, Los Angeles, and Beal and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

a → b → c → d → a

a → b → d → c → a

a → c → b → d → a

a → d → c → b → a

a → c → d → b → a

a → d → b → c → a

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.220.

The possible distances are:

396 + 410 + 106 + 159 = 1071

207 + 410 + 439 + 159 = 1215

396 + 439 + 106 + 207 = 1148

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with n vertices,

- The number of distinct Hamilton cycles is (n − 1)!.

- There are at most (n − 1)!/2 different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating possible weights of Hamilton cycles.

Suppose you have a complete weighted graph with vertices N, M, O, and P.

- Use the formula (n − 1)! to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula (n − 1)!/2 to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know?
  Cycle 1: N → M → O → P → N
  Cycle 2: N → M → P → O → N
  Cycle 3: N → O → M → P → N
  Cycle 4: N → O → P → M → N
  Cycle 5: N → P → M → O → N
  Cycle 6: N → P → O → M → N

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, n = 4. This means there are (n − 1)! = (4 − 1)! = 3 ⋅ 2 ⋅ 1 = 6 distinct Hamilton cycles beginning at any given vertex.

- Since n = 4, there are (n − 1)!/2 = (4 − 1)!/2 = 6/2 = 3 possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.
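The listing and the pairing of reversed cycles can be checked with a short sketch that uses `itertools.permutations` from the Python standard library. The function name is illustrative; the first vertex given is used as the fixed starting point.

```python
from itertools import permutations


def hamilton_cycles(vertices):
    """All directed Hamilton cycles of a complete graph, each starting at the first vertex."""
    start, *rest = vertices
    return [(start, *middle, start) for middle in permutations(rest)]


cycles = hamilton_cycles("NMOP")
for number, cycle in enumerate(cycles, start=1):
    print(f"Cycle {number}:", " → ".join(cycle))

# A cycle and its reverse trace the same route, so keep one of each pair.
undirected = {min(cycle, cycle[::-1]) for cycle in cycles}
print(len(cycles), len(undirected))  # 6 3
```

The cycles come out in the same order as the list in the example, and collapsing each cycle with its reverse leaves the three routes that can have different weights.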

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as we did in Example 12.42.


The brute force method.

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method . The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.
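The four steps can be sketched in Python as follows. The edge weights are read off the sums in the Vandenberg example earlier in this section (V, B, E, and L stand for Vandenberg, Beal, Edwards, and Los Angeles); the function and variable names are illustrative.

```python
from itertools import permutations

# Edge weights taken from the sums in the Vandenberg example.
WEIGHTS = {
    ("V", "B"): 396, ("V", "E"): 207, ("V", "L"): 159,
    ("B", "E"): 410, ("B", "L"): 439, ("E", "L"): 106,
}


def weight(a, b):
    """Weight of the undirected edge between a and b."""
    return WEIGHTS[(a, b)] if (a, b) in WEIGHTS else WEIGHTS[(b, a)]


def brute_force(start, others):
    """List every Hamilton cycle from start, weigh each one, and keep the lightest."""
    best = None
    for middle in permutations(others):
        cycle = (start, *middle, start)
        total = sum(weight(a, b) for a, b in zip(cycle, cycle[1:]))
        if best is None or total < best[0]:
            best = (total, cycle)
    return best


print(brute_force("V", "BEL"))  # (1071, ('V', 'B', 'E', 'L', 'V'))
```

The loop runs (n − 1)! times, which is fine for four bases but quickly becomes impractical as the number of vertices grows.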

Example 12.44

Applying the brute force method.

On the next assignment, the air force officer must leave from Travis Air Force base, visit Beal, Edwards, and Vandenberg Air Force bases each exactly once and return to Travis Air Force base. There is no need to visit Los Angeles Air Force base. Use Figure 12.221 to find the shortest route.

Step 1: Since there are 4 vertices, there will be (4 − 1)! = 3! = 6 cycles, but half of them will be the reverse of the others; so, there will be (4 − 1)!/2 = 6/2 = 3 possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.222.

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V . The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE , and VEB . These would correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each path. You can reduce your work by observing the cycles that are reverses of each other.

1: 84 + 410 + 207 + 396 = 1097

2: 84 + 396 + 207 + 370 = 1057

3: 370 + 410 + 396 + 396 = 1572

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second path, T → B → V → E → T , and its reverse, T → E → V → B → T , have the least weight. The solution is that the officer should travel from Travis Air Force base to Beal Air Force Base, to Vandenberg Air Force base, to Edwards Air Force base, and return to Travis Air Force base, or the same route in reverse.
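The four steps of the brute force method can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the edge weights are read off the sums in Step 3 (T = Travis, B = Beal, E = Edwards, V = Vandenberg), and the names `DIST`, `cycle_weight`, and `brute_force` are hypothetical helpers introduced only for this example.

```python
from itertools import permutations

# Edge weights as they appear in the Step 3 sums (assumed symmetric).
DIST = {
    frozenset("TB"): 84,
    frozenset("TE"): 370,
    frozenset("TV"): 396,
    frozenset("BE"): 410,
    frozenset("BV"): 396,
    frozenset("EV"): 207,
}

def cycle_weight(cycle):
    """Add up the weights of consecutive edges in a closed cycle."""
    return sum(DIST[frozenset((a, b))] for a, b in zip(cycle, cycle[1:]))

def brute_force(start, others):
    """List every Hamilton cycle from `start`, weigh each, return the lightest."""
    cycles = [(start, *middle, start) for middle in permutations(others)]
    weights = {cycle: cycle_weight(cycle) for cycle in cycles}
    best = min(weights, key=weights.get)  # ties resolve to the first cycle listed
    return best, weights

best_cycle, all_weights = brute_force("T", "BEV")
for cycle, weight in all_weights.items():
    print(" → ".join(cycle), weight)
print("Least weight:", " → ".join(best_cycle), all_weights[best_cycle])
```

Because `permutations` lists the middle vertices in the same systematic order used in Step 2, the six cycles print in the order 1 through 6.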


Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.221. There would be $(5-1)! = 4! = 4 \times 3 \times 2 \times 1 = 24$ different arrangements of vertices and $\frac{(5-1)!}{2} = \frac{4!}{2} = \frac{24}{2} = 12$ distances to compare using the brute force method. If you consider 10 Air Force bases, there would be $(10-1)! = 9! = 9 \cdot 8 \cdot 7 \cdot 6 \cdot 5 \cdot 4 \cdot 3 \cdot 2 \cdot 1 = 362{,}880$ different arrangements and $\frac{(10-1)!}{2} = \frac{9!}{2} = \frac{362{,}880}{2} = 181{,}440$ distances to consider. There must be another way!
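To see how quickly these counts grow, the two formulas can be evaluated directly. The sketch below assumes a complete graph with at least 3 vertices; the function name `hamilton_cycle_counts` is a hypothetical helper, not something defined in the text.

```python
from math import factorial

def hamilton_cycle_counts(n):
    """Return ((n-1)!, (n-1)!/2) for a complete graph with n >= 3 vertices."""
    directed = factorial(n - 1)      # cycles from a fixed starting vertex
    return directed, directed // 2   # each route is counted once per direction

for n in (4, 5, 10):
    directed, weights = hamilton_cycle_counts(n)
    print(f"n = {n}: {directed} cycles, at most {weights} distinct weights")
```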

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method , which always visits the closest or least costly place next. This method finds a Hamilton cycle of relatively low weight in a complete graph: at each phase, the next vertex is chosen by comparing the edges between the current vertex and the vertices that remain to be visited and taking the edge of lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A , visit cities B, C, D, E , and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.223.

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex A as V₁ (Figure 12.224) and compare the weights of the edges from A to each of the other vertices. The lowest of these is $100, which is the edge between A and F .

Mark F as V₂ (Figure 12.225) and compare the weights of the edges from F to the vertices that remain to be visited. The lowest of these is $150, which is the edge between F and D .

Mark D as V₃ (Figure 12.226) and compare the weights of the edges from D to the vertices that remain to be visited. The lowest of these is $120, which is the edge between D and B .

So, mark B as V₄ (Figure 12.227). Only C and E remain to be visited. The lower amount is $160, which is the edge between B and E .

Now you can mark E as V₅ (Figure 12.228). Only C remains, so it is visited last. Make a note of the weight of the edge from E to C , which is $180, and from C back to A , which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A . The weight of the circuit is $100 + $150 + $120 + $160 + $180 + $210 = $920. This may or may not be the route with the lowest cost, but there is a good chance it is very close since the weights are most of the lowest weights on the graph and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label it V₁ for "visited 1st." Identify the edge of lowest weight between V₁ and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in the previous step as Vₙ, where the subscript n indicates the order in which the vertex is visited. Identify the edge of lowest weight between Vₙ and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is V₁ → V₂ → ⋯ → Vₙ → V₁.
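The three steps can be sketched in Python as follows. Since the airfare graph is only available as a figure, this sketch reuses the four Air Force bases from Example 12.44, with edge weights read off that example's sums; the names `WEIGHTS` and `nearest_neighbor` are hypothetical, and breaking ties alphabetically is an assumption the text does not specify.

```python
# Symmetric weights between T(ravis), B(eal), E(dwards), and V(andenberg).
WEIGHTS = {
    "T": {"B": 84, "E": 370, "V": 396},
    "B": {"T": 84, "E": 410, "V": 396},
    "E": {"T": 370, "B": 410, "V": 207},
    "V": {"T": 396, "B": 396, "E": 207},
}

def nearest_neighbor(weights, start):
    """Greedy tour: always move to the closest vertex not yet visited."""
    route = [start]                      # Step 1: the start is V1
    unvisited = set(weights) - {start}
    total = 0
    while unvisited:                     # Steps 2 and 3: repeat until none remain
        current = route[-1]
        # Lowest-weight edge from the current vertex; ties broken by name.
        nxt = min(unvisited, key=lambda v: (weights[current][v], v))
        total += weights[current][nxt]
        route.append(nxt)
        unvisited.remove(nxt)
    total += weights[route[-1]][start]   # close the cycle back to V1
    route.append(start)
    return route, total

route, total = nearest_neighbor(WEIGHTS, "T")
print(" → ".join(route), total)
```

On this small graph the greedy route is T → B → V → E → T, the same cycle the brute force method selected in Example 12.44. That agreement is not guaranteed on larger graphs.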

Example 12.45

Using the nearest neighbor method.

Suppose that the candidate for governor wants to hold rallies around the state but time before the election is very limited. They would like to leave their home in city A , visit cities B , C , D , E , and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.229. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as V₁. The edge of lowest weight between A and the remaining vertices is 85 min between A and D .

Step 2: Label vertex D as V₂. The edge of lowest weight between D and the vertices that remain to be visited, B, C, E , and F , is 70 min between D and F .

Repeat Step 2: Label vertex F as V₃. The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E , is 75 min between F and C .

Repeat Step 2: Label vertex C as V₄. The edge of lowest weight between C and the vertices that remain to be visited, B and E , is 100 min between C and B .

Repeat Step 2: Label vertex B as V₅. The only vertex that remains to be visited is E . The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A . So, a route of relatively low travel time is A to D to F to C to B to E and back to A . The total travel time of this route is: 85 min + 70 min + 75 min + 100 min + 95 min + 90 min = 515 min, or 8 hrs 35 min.


The Traveling Salesman Problem

- First Online: 21 June 2020


The Traveling Salesman Problem (TSP) is a classical algorithmic problem. It consists of identifying the shortest possible route that visits several connected cities. Not only is the problem relevant from an algorithmic point of view, but it also has many concrete applications, like microchip manufacturing, as you will shortly see.



Bergel, A. (2020). The Traveling Salesman Problem. In: Agile Artificial Intelligence in Pharo. Apress, Berkeley, CA. https://doi.org/10.1007/978-1-4842-5384-7_10


Publisher Name : Apress, Berkeley, CA

Print ISBN : 978-1-4842-5383-0

Online ISBN : 978-1-4842-5384-7


Explained: What is Traveling Salesman Problem (TSP)

- Last Updated: January 24, 2023

- A well-known mathematical problem called the Traveling Salesman Problem (TSP) aims to determine the shortest path between a number of places.

- Logistics, transportation, and manufacturing are just a few of the industries where the TSP is useful.

- The number of points, the form of the point set, and the algorithm employed can all have an impact on how the TSP is solved.

- Technology advancements like cloud computing and parallel processing have made it possible to solve the Traveling Salesman Problem effectively for larger and more complicated situations.

The traveling salesman problem is not new for delivery-based businesses. As delivery operations have expanded, industry experts have been pushed to find better solutions to it.

The major challenge is to find the most efficient routes for multi-stop deliveries. Without the shortest routes, your delivery agent will take more time to reach the final destination. Problems also arise when you have multiple route options but cannot tell which one is the most efficient.

Left unaddressed, the traveling salesman problem costs you time and results in late deliveries . So, before it becomes a serious issue for your delivery company, let us understand the traveling salesman problem and look at ways to solve it in this blog.


What is Traveling Salesman Problem (TSP)?

The Traveling Salesman Problem (TSP) is a combinatorial optimization problem: given a list of specific destinations, find the shortest and most efficient route that reaches all of them.

It is a common algorithmic problem in delivery operations, where poor routes slow down multi-stop deliveries and result in financial loss. TSP arises whenever multiple routes are available and choosing the minimum-cost one is hard to do by hand.

How difficult is it to solve?

TSP is quite difficult to solve: it is NP-hard, which means that no polynomial-time algorithm for it is known. As the number of addresses grows, the number of possible routes, and with it the work needed to guarantee the best one, grows extremely quickly.

So, finding optimal TSP solutions manually is impractical beyond a handful of stops, and even fast computers running exact algorithms can struggle to prove optimality on large instances.

However, in practice an efficient route can still be found using approximation algorithms, heuristics, or automated route planning.

Common Challenges of Traveling Salesman Problem (TSP)

Being a salesman is not easy, as you need to face various unavoidable challenges in your everyday schedules.

- Firstly, every day, salespeople have to carry out a number of deliveries in a very limited time, so there are a lot of time constraints. To overcome this, you need to plan your routes in a way that you make the most out of them.

- Secondly, there are chances of last-minute changes. Sometimes you get extra and urgent visits to make, while sometimes, some visits are postponed or canceled due to the customer’s unavailability.

- Lastly, a math problem, a combinatorial optimization problem, arises. A combinatorial optimization problem is a problem that is mathematically complex to solve as you have to deal with many variables.

These are the major challenges in the Traveling Salesman Problem (TSP): out of hundreds or thousands of possible permutations, you need a route with the shortest distance that uses less fuel, delivers to customers on time, and can be modified when last-minute changes occur.

What are Some Popular Solutions to Traveling Salesman Problem?

Here are some approaches to finding the shortest, or a near-optimal, route for this combinatorial optimization problem.

1. Nearest Neighbor (NN)

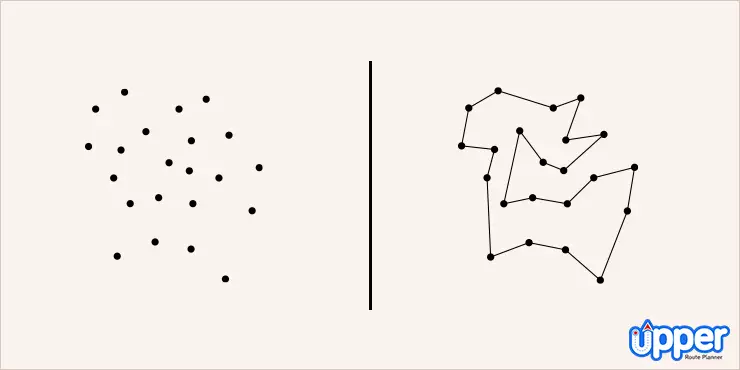

The Nearest Neighbor Method is probably the most basic TSP heuristic. The driver always travels next to the nearest destination that has not yet been visited. Once all the cities are covered, the driver heads back to the starting point.

To apply this simple method, choose a starting city (at random, if none is given), move on to the closest unvisited city, and repeat. Once all the cities on the map are covered, return to the city you started from. The method is fast, but the route it produces is not guaranteed to be the shortest.

2. The Branch and Bound Algorithm

The Branch & Bound method breaks the problem into a series of smaller sub-problems (branches), each of which fixes part of the route. The choice made in one sub-problem constrains the choices available in the sub-problems that follow it. For each branch, the method computes a bound on the cost of any route in that branch and discards the branch if the bound shows it cannot beat the best complete route found so far.
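A minimal sketch of the idea in Python is shown below. It uses the simplest possible bound, the cost of the partial route so far, which is valid only when all distances are non-negative; practical solvers use much tighter bounds. The four-city distance table and the names `WEIGHTS` and `branch_and_bound` are illustrative assumptions, not part of any particular product or library.

```python
import math

# Illustrative symmetric distances between four cities T, B, E, and V.
WEIGHTS = {
    "T": {"B": 84, "E": 370, "V": 396},
    "B": {"T": 84, "E": 410, "V": 396},
    "E": {"T": 370, "B": 410, "V": 207},
    "V": {"T": 396, "B": 396, "E": 207},
}

def branch_and_bound(weights, start):
    """Depth-first search over partial routes, pruning hopeless branches."""
    best = {"cost": math.inf, "route": None}

    def extend(route, cost, unvisited):
        # Bound: a partial route that already costs as much as the best
        # complete tour cannot lead to an improvement, so drop it.
        if cost >= best["cost"]:
            return
        if not unvisited:
            total = cost + weights[route[-1]][start]  # close the tour
            if total < best["cost"]:
                best["cost"], best["route"] = total, route + [start]
            return
        # Branch: try each remaining city as the next stop.
        for city in sorted(unvisited):
            extend(route + [city],
                   cost + weights[route[-1]][city],
                   unvisited - {city})

    extend([start], 0, frozenset(weights) - {start})
    return best["route"], best["cost"]

best_route, best_cost = branch_and_bound(WEIGHTS, "T")
print(" → ".join(best_route), best_cost)
```

Unlike a greedy heuristic, this search still returns an optimal tour; the pruning only skips branches that provably cannot improve on the best tour found so far.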

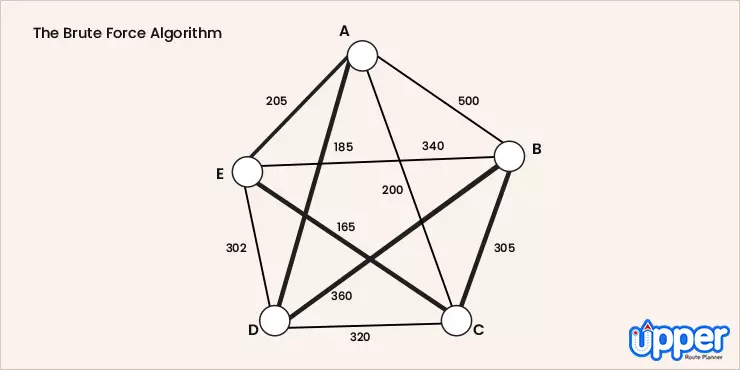

3. The Brute Force Algorithm

The Brute Force Approach evaluates every possible permutation of the route. First, calculate the total number of routes. Then list all the possible routes, calculate the distance of each one, and select the shortest route, which is the optimal solution.

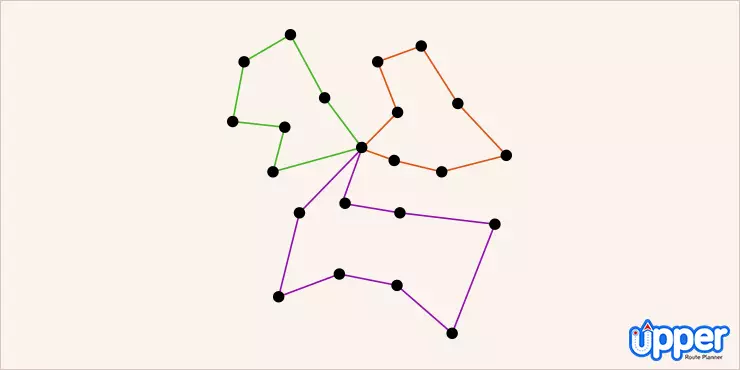

What are Other Optimal Solutions to the Traveling Salesman Problem?

- Multi-Agent System : Divides the cities into groups, then assigns a single agent to each group to discover the shortest path covering all the cities in that group.

- Zero Suffix Method : Solves the classical symmetric TSP; it was introduced by Indian researchers.

- Multi-Objective Evolutionary Algorithm : Solves the TSP using the NSGA-II algorithm.

- Biogeography-based Optimization Algorithm : Based on the migration strategy of animals, applied to optimization problems.

- Meta-Heuristic Multi Restart Iterated Local Search : Reported to be more efficient than genetic algorithms.


Most businesses encounter the Traveling Salesman Problem (TSP) through last mile delivery challenges . Last mile delivery is the process of delivering goods from the warehouse (or a depot) to the customer’s preferred location. Within supply chain management, it is the last mile deliveries that cost you a substantial amount.

At the same time, you may need to absorb a financial loss in order to maintain your current position in the market. Suppose a last mile delivery costs you $11 but the customer pays only $8: you take a loss of $3. This happens because of pricing norms that let the customer pay less than the delivery costs.

This hefty last mile delivery cost is often the result of not using Vehicle Routing Problem (VRP) software. VRP software finds the most efficient routes so that operational costs do not increase. So, by using the right VRP software, you do not have to work out TSP routes yourself.

Such delivery management software uses an automated process that doesn’t need manual intervention or calculations to pick the best routes. Hence, it is the easiest way to deal with the Traveling Salesman Problem (TSP).

How TSP and VRP Combinedly Pile Up Challenges?

The Traveling Salesman Problem is an optimization problem studied in graph theory and operations research. In this optimization problem, the nodes (cities) of the graph are all connected to one another by direct edges (routes). The weight of each edge indicates the distance covered on the route between the two cities.

The problem is about finding an optimal route that visits each city exactly once and returns to the starting point.

The TSP is often studied in a generalized version, the Vehicle Routing Problem . It is one of the most widely studied problems in mathematical optimization. VRP deals with finding a set of routes, one per vehicle, that reduces time, fuel, and delivery costs.

Is there any real world solution to TSP and VRP?

Many solutions for TSP and VRP come from academic research and are not always practical in everyday use: they aim for exact optimality on idealized problems, whereas real operations need solutions that adapt to changing conditions. So, businesses that want to handle these problems need a TSP solver integrated with route optimization software.

The right TSP solver will help you address such modern challenges. It offers built-in route planning and optimization so that your driver doesn’t get stranded while delivering a parcel. It is also equipped with an efficient algorithm that provides workable solutions to the TSP. As a result, the delivery manager can create a route plan hassle-free in a few minutes.

Can a Route Planner Resolve the Traveling Salesman Problem (TSP)?

In the general case, the Traveling Salesman Problem (TSP) involves finding the shortest possible route that covers a set of stops and returns to the starting point. The number of possible routes grows factorially as the number of locations increases, so finding the best solution becomes computationally difficult even for moderately sized problems.

But, Upper Route Planner, a route optimization software , is built differently. Upper has all the solutions you need when talking about TSP.

For example, if you are in charge of planning delivery routes with more than 500 stops in them, all you need to do is import an Excel or CSV file with multiple addresses into Upper, review, allot delivery drivers, optimize, and dispatch with a single click. This delivery route planning solution saves you hours of time spent on planning delivery routes and optimizing them.

Also, once the delivery is completed, Upper lets you collect proof of delivery. This is how the Upper Route Planner is a simple solution to the Traveling Salesman Problem.


TSP stands for Traveling Salesman Problem, while VRP is the abbreviation of Vehicle Routing Problem. In the delivery industry, both are widely known by their abbreviations.

Yes, you can avoid working out TSP routes by hand by using the right route planner. The online route planner helps you get an optimized path so that your delivery agents don’t have to deal with such challenges. In addition, they don’t have to choose among multiple routes. Instead, they can follow the shortest one.

Christofides' algorithm, a well-known result in theoretical computer science, finds approximate solutions for TSP instances in which distances satisfy the triangle inequality: the round trip it produces is guaranteed to be at most 50% longer than the optimal one. A newer method improves on that guarantee very slightly. Its round trips are still not guaranteed to be optimal, but its bound is better than the old one.

Solving the vehicle routing problem (VRP) reduces transportation costs as well as drivers’ expenses. It helps you serve more customers with fewer vehicles and drivers, and it makes travel times more predictable.

Create Optimized Routes Using Upper and Bid Goodbye to Traveling Salesman Problem

As a business owner, if you are dealing with TSP and want to get rid of it, we recommend using a TSP solver like Upper Route Planner. The online route planner is capable of finding efficient routes no matter how big your TSP is. It has a built-in algorithm that helps you get an optimized path in a matter of seconds.

That way, you avoid such real-world problems and perform deliveries in minimum time. Upper’s delivery route planner offers a dedicated driver app that keeps your drivers on track so they can quickly wrap up pending deliveries. Don’t just take our word for it: book a demo on Upper.

Rakesh Patel, author of two defining books on reverse geotagging, is a trusted authority in routing and logistics. His innovative solutions at Upper Route Planner have simplified logistics for businesses across the board. A thought leader in the field, Rakesh's insights are shaping the future of modern-day logistics, making him your go-to expert for all things route optimization.


https://www.upperinc.com/guides/travelling-salesman-problem/


12.9 Traveling Salesperson Problem

Learning objectives.

After completing this section, you should be able to:

- Distinguish between brute force algorithms and greedy algorithms.

- List all distinct Hamilton cycles of a complete graph.

- Apply brute force method to solve traveling salesperson applications.

- Apply nearest neighbor method to solve traveling salesperson applications.

We looked at Hamilton cycles and paths in the previous sections Hamilton Cycles and Hamilton Paths . In this section, we will analyze Hamilton cycles in complete weighted graphs to find the shortest route to visit a number of locations and return to the starting point. Besides the many routing applications in which we need the shortest distance, there are also applications in which we search for the route that is least expensive or takes the least time. Here are a few less common applications that you can read about on a website set up by the mathematics department at the University of Waterloo in Ontario, Canada:

- Design of fiber optic networks

- Minimizing fuel expenses for repositioning satellites

- Development of semi-conductors for microchips

- A technique for mapping mammalian chromosomes in genome sequencing

Before we look at approaches to solving applications like these, let's discuss the two types of algorithms we will use.

Brute Force and Greedy Algorithms

An algorithm is a sequence of steps that can be used to solve a particular problem. We have solved many problems in this chapter, and the procedures that we used were different types of algorithms. In this section, we will use two common types of algorithms, a brute force algorithm and a greedy algorithm . A brute force algorithm begins by listing every possible solution and applying each one until the best solution is found. A greedy algorithm approaches a problem in stages, making the apparent best choice at each stage, then linking the choices together into an overall solution which may or may not be the best solution.

To understand the difference between these two algorithms, consider the tree diagram in Figure 12.187 . Suppose we want to find the path from left to right with the largest total sum. For example, branch A in the tree diagram has a sum of 10 + 2 + 11 + 13 = 36.

To be certain that you pick the branch with greatest sum, you could list each sum from each of the different branches:

A : 10 + 2 + 11 + 13 = 36

B : 10 + 2 + 11 + 8 = 31

C : 10 + 2 + 15 + 1 = 28

D : 10 + 2 + 15 + 6 = 33

E : 10 + 7 + 3 + 20 = 40

F : 10 + 7 + 3 + 14 = 34

G : 10 + 7 + 4 + 11 = 32

H : 10 + 7 + 4 + 5 = 26

Then we know with certainty that branch E has the greatest sum.

Now suppose that you wanted to find the branch with the highest value, but you only were shown the tree diagram in phases, one step at a time.

After phase 1, you would have chosen the branch with 10 and 7. So far, you are following the same branch. Let’s look at the next phase.

After phase 2, based on the information you have, you will choose the branch with 10, 7 and 4. Now, you are following a different branch than before, but it is the best choice based on the information you have. Let’s look at the last phase.

After phase 3, you will choose branch G which has a sum of 32.

The process of adding the values on each branch and selecting the highest sum is an example of a brute force algorithm because all options were explored in detail. The process of choosing the branch in phases, based on the best choice at each phase is a greedy algorithm. Although a brute force algorithm gives us the ideal solution, it can take a very long time to implement. Imagine a tree diagram with thousands or even millions of branches. It might not be possible to check all the sums. A greedy algorithm, on the other hand, can be completed in a relatively short time, and generally leads to good solutions, but not necessarily the ideal solution.
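The contrast can be made concrete with a short Python sketch. The tree below is reconstructed from the eight branch sums listed above, since the figure itself is not reproduced here; the nested-tuple layout and the function names `brute_force_best` and `greedy_path_sum` are assumptions made for illustration.

```python
# Each node is (value, children); leaves have an empty child list.
TREE = (10, [
    (2, [(11, [(13, []), (8, [])]),
         (15, [(1, []), (6, [])])]),
    (7, [(3, [(20, []), (14, [])]),
         (4, [(11, []), (5, [])])]),
])

def brute_force_best(node):
    """Explore every branch and return the largest root-to-leaf sum."""
    value, children = node
    if not children:
        return value
    return value + max(brute_force_best(child) for child in children)

def greedy_path_sum(node):
    """At each phase, step to the child with the larger value."""
    value, children = node
    total = value
    while children:
        value, children = max(children, key=lambda child: child[0])
        total += value
    return total

print("Brute force:", brute_force_best(TREE))  # checks all eight branches
print("Greedy:", greedy_path_sum(TREE))        # looks only one phase ahead
```

The brute force search finds branch E, while the greedy walk ends on branch G because it commits to 4 over 3 before it can see the 20 beyond.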

Example 12.42

Distinguishing between brute force and greedy algorithms.

A cashier rings up a sale for $4.63 in U.S. currency. The customer pays with a $5 bill. The cashier would like to give the customer $0.37 in change using the fewest coins possible. The coins that can be used are quarters ($0.25), dimes ($0.10), nickels ($0.05), and pennies ($0.01). The cashier starts by selecting the coin of highest value less than or equal to $0.37, which is a quarter. This leaves $0.37 − $0.25 = $0.12. The cashier selects the coin of highest value less than or equal to $0.12, which is a dime. This leaves $0.12 − $0.10 = $0.02. The cashier selects the coin of highest value less than or equal to $0.02, which is a penny. This leaves $0.02 − $0.01 = $0.01. The cashier selects the coin of highest value less than or equal to $0.01, which is a penny. This leaves no remainder. The cashier used one quarter, one dime, and two pennies, which is four coins. Use this information to answer the following questions.

- Is the cashier’s approach an example of a greedy algorithm or a brute force algorithm? Explain how you know.

- The cashier’s solution is the best solution. In other words, four is the fewest number of coins possible. Is this consistent with the results of an algorithm of this kind? Explain your reasoning.

- The approach the cashier used is an example of a greedy algorithm, because the problem was approached in phases and the best choice was made at each phase. Also, it is not a brute force algorithm, because the cashier did not attempt to list out all possible combinations of coins to reach this conclusion.

- Yes, it is consistent. A greedy algorithm does not always yield the best result, but sometimes it does.
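The cashier's procedure, and a brute force check of its result, can be sketched in Python. Amounts are in cents to avoid floating-point rounding; the names `COINS`, `greedy_change`, and `fewest_coins_brute_force` are hypothetical, and the brute force search assumes a 1-cent coin is available so that a solution always exists.

```python
from itertools import combinations_with_replacement

COINS = (25, 10, 5, 1)  # quarter, dime, nickel, penny (in cents)

def greedy_change(amount, coins=COINS):
    """Repeatedly take the largest coin that does not exceed what is left."""
    used = []
    for coin in sorted(coins, reverse=True):
        while amount >= coin:
            used.append(coin)
            amount -= coin
    return used

def fewest_coins_brute_force(amount, coins=COINS):
    """Try every combination of 1 coin, then 2 coins, ... until one fits."""
    for count in range(1, amount + 1):
        for combo in combinations_with_replacement(coins, count):
            if sum(combo) == amount:
                return list(combo)
    return []

print(greedy_change(37))
print(fewest_coins_brute_force(37))
```

For 37 cents both approaches use four coins, which matches the answer above: here the greedy choice happens to be optimal, although a greedy algorithm does not guarantee that in general.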


The traveling salesperson problem.

Now let’s focus our attention on the graph theory application known as the traveling salesperson problem (TSP) in which we must find the shortest route to visit a number of locations and return to the starting point.

Recall from Hamilton Cycles , the officer in the U.S. Air Force who is stationed at Vandenberg Air Force base and must drive to visit three other California Air Force bases before returning to Vandenberg. The officer needed to visit each base once. We looked at the weighted graph in Figure 12.192 representing the four U.S. Air Force bases: Vandenberg, Edwards, Los Angeles, and Beal and the distances between them.

Any route that visits each base and returns to the start would be a Hamilton cycle on the graph. If the officer wants to travel the shortest distance, this will correspond to a Hamilton cycle of lowest weight. We saw in Table 12.11 that there are six distinct Hamilton cycles (directed cycles) in a complete graph with four vertices, but some lie on the same cycle (undirected cycle) in the graph.

Since the distance between bases is the same in either direction, it does not matter if the officer travels clockwise or counterclockwise. So, there are really only three possible distances as shown in Figure 12.193 .

The possible distances are:

So, a Hamilton cycle of least weight is V → B → E → L → V (or the reverse direction). The officer should travel from Vandenberg to Beal to Edwards, to Los Angeles, and back to Vandenberg.

Finding Weights of All Hamilton Cycles in Complete Graphs

Notice that we listed all of the Hamilton cycles and found their weights when we solved the TSP about the officer from Vandenberg. This is a skill you will need to practice. To make sure you don't miss any, you can calculate the number of possible Hamilton cycles in a complete graph. It is also helpful to know that half of the directed cycles in a complete graph are the same cycle in reverse direction, so, you only have to calculate half the number of possible weights, and the rest are duplicates.

In a complete graph with $n$ vertices,

- The number of distinct Hamilton cycles is $(n-1)!$.

- There are at most $\frac{(n-1)!}{2}$ different weights of Hamilton cycles.

TIP! When listing all the distinct Hamilton cycles in a complete graph, you can start them all at any vertex you choose. Remember, the cycle a → b → c → a is the same cycle as b → c → a → b so there is no need to list both.

Example 12.43

Calculating possible weights of Hamilton cycles.

Suppose you have a complete weighted graph with vertices N, M, O , and P .

- Use the formula $(n-1)!$ to calculate the number of distinct Hamilton cycles in the graph.

- Use the formula $\frac{(n-1)!}{2}$ to calculate the greatest number of different weights possible for the Hamilton cycles.

- Are all of the distinct Hamilton cycles listed here? How do you know?
  Cycle 1: N → M → O → P → N
  Cycle 2: N → M → P → O → N
  Cycle 3: N → O → M → P → N
  Cycle 4: N → O → P → M → N
  Cycle 5: N → P → M → O → N
  Cycle 6: N → P → O → M → N

- Which pairs of cycles must have the same weights? How do you know?

- There are 4 vertices; so, $n = 4$. This means there are $(n-1)! = (4-1)! = 3 \cdot 2 \cdot 1 = 6$ distinct Hamilton cycles beginning at any given vertex.

- Since $n = 4$, there are $\frac{(n-1)!}{2} = \frac{(4-1)!}{2} = \frac{6}{2} = 3$ possible weights.

- Yes, they are all distinct cycles and there are 6 of them.

- Cycles 1 and 6 have the same weight, Cycles 2 and 4 have the same weight, and Cycles 3 and 5 have the same weight, because these pairs follow the same route through the graph but in reverse.

TIP! When listing the possible cycles, ignore the vertex where the cycle begins and ends and focus on the ways to arrange the letters that represent the vertices in the middle. Using a systematic approach is best; for example, if you must arrange the letters M, O, and P, first list all those arrangements beginning with M, then beginning with O, and then beginning with P, as in the cycle list of Example 12.43.
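The systematic listing described in this tip is what a permutation generator produces. The sketch below, with hypothetical helper names `list_cycles` and `reverse_pairs`, lists the six cycles of Example 12.43 and pairs each cycle with its reverse; it assumes the middle vertices are supplied in alphabetical order.

```python
from itertools import permutations

def list_cycles(start, middle):
    """List Hamilton cycles from `start`, arranging the middle vertices in order."""
    return [(start, *p, start) for p in permutations(middle)]

def reverse_pairs(cycles):
    """Pair the 1-based numbers of cycles that are reverses of each other."""
    number = {cycle: i for i, cycle in enumerate(cycles, start=1)}
    pairs = []
    for cycle, i in number.items():
        j = number[cycle[::-1]]  # the same route travelled backwards
        if i < j:
            pairs.append((i, j))
    return pairs

cycles = list_cycles("N", "MOP")
for i, cycle in enumerate(cycles, start=1):
    print(f"Cycle {i}:", " → ".join(cycle))
print("Same-weight pairs:", reverse_pairs(cycles))
```

The pairs reported match the answer above: cycles 1 and 6, 2 and 4, and 3 and 5.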


The Brute Force Method

The method we have been using to find a Hamilton cycle of least weight in a complete graph is a brute force algorithm, so it is called the brute force method. The steps in the brute force method are:

Step 1: Calculate the number of distinct Hamilton cycles and the number of possible weights.

Step 2: List all possible Hamilton cycles.

Step 3: Find the weight of each cycle.

Step 4: Identify the Hamilton cycle of lowest weight.

Example 12.44

Applying the brute force method.

On the next assignment, the air force officer must leave from Travis Air Force Base, visit Beal, Edwards, and Vandenberg Air Force Bases each exactly once, and return to Travis Air Force Base. There is no need to visit Los Angeles Air Force Base. Use Figure 12.194 to find the shortest route.

Step 1: Since there are 4 vertices, there will be \((4-1)! = 3! = 6\) cycles, but half of them will be the reverse of the others; so, there will be \(\frac{(4-1)!}{2} = \frac{6}{2} = 3\) possible distances.

Step 2: List all the Hamilton cycles in the subgraph of the graph in Figure 12.195.

To find the 6 cycles, focus on the three vertices in the middle, B, E, and V. The arrangements of these vertices are BEV, BVE, EBV, EVB, VBE, and VEB. These would correspond to the 6 cycles:

1: T → B → E → V → T

2: T → B → V → E → T

3: T → E → B → V → T

4: T → E → V → B → T

5: T → V → B → E → T

6: T → V → E → B → T

Step 3: Find the weight of each cycle. You can reduce your work by observing the cycles that are reverses of each other.

1: \(84 + 410 + 207 + 396 = 1097\)

2: \(84 + 396 + 207 + 370 = 1057\)

3: \(370 + 410 + 396 + 396 = 1572\)

4: Reverse of cycle 2, 1057

5: Reverse of cycle 3, 1572

6: Reverse of cycle 1, 1097

Step 4: Identify a Hamilton cycle of least weight.

The second cycle, T → B → V → E → T, and its reverse, T → E → V → B → T, have the least weight. The solution is that the officer should travel from Travis Air Force Base to Beal Air Force Base, to Vandenberg Air Force Base, to Edwards Air Force Base, and return to Travis Air Force Base, or follow the same route in reverse.
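The four steps of the brute force method can be sketched in Python. This is a minimal illustration, not a definitive implementation: the function names are made up for the sketch, and the edge weights are the ones that appear in the sums of Step 3, stored once per pair on the assumption that each distance is the same in both directions.

```python
from itertools import permutations

# Edge weights taken from the sums in Step 3 of Example 12.44.
WEIGHTS = {
    frozenset("TB"): 84,
    frozenset("BE"): 410,
    frozenset("EV"): 207,
    frozenset("VT"): 396,
    frozenset("BV"): 396,
    frozenset("ET"): 370,
}

def cycle_weight(cycle, weights):
    """Add the weights of the consecutive edges along the cycle (Step 3)."""
    return sum(weights[frozenset(edge)] for edge in zip(cycle, cycle[1:]))

def brute_force(start, others, weights):
    """List every Hamilton cycle from `start` (Step 2) and keep one of least weight (Step 4)."""
    best_cycle, best_weight = None, None
    for middle in permutations(others):
        cycle = (start, *middle, start)
        weight = cycle_weight(cycle, weights)
        if best_weight is None or weight < best_weight:
            best_cycle, best_weight = cycle, weight
    return best_cycle, best_weight

best_cycle, best_weight = brute_force("T", "BEV", WEIGHTS)
print(" → ".join(best_cycle), best_weight)
```

Because a cycle and its reverse have equal weight, the sketch keeps whichever of the two it meets first; here that is T → B → V → E → T.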


Now suppose that the officer needed a cycle that visited all 5 of the Air Force bases in Figure 12.194. There would be \((5-1)! = 4! = 4 \times 3 \times 2 \times 1 = 24\) different arrangements of vertices and \(\frac{(5-1)!}{2} = \frac{4!}{2} = \frac{24}{2} = 12\) distances to compare using the brute force method. If you consider 10 Air Force bases, there would be \((10-1)! = 9! = 9 \cdot 8 \cdot 7 \cdot 6 \cdot 5 \cdot 4 \cdot 3 \cdot 2 \cdot 1 = 362{,}880\) different arrangements and \(\frac{(10-1)!}{2} = \frac{9!}{2} = \frac{9 \cdot 8 \cdot 7 \cdot 6 \cdot 5 \cdot 4 \cdot 3 \cdot 2 \cdot 1}{2} = 181{,}440\) distances to consider. There must be another way!
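To see how quickly the work grows, the two formulas can be evaluated for a few graph sizes. The short Python sketch below is only illustrative; the function name `cycle_counts` and the sizes 15 and 20 are additions that do not come from the examples above.

```python
from math import factorial

def cycle_counts(n):
    """Return ((n - 1)!, (n - 1)!/2) for a complete graph with n vertices."""
    arrangements = factorial(n - 1)
    return arrangements, arrangements // 2

for n in (4, 5, 10, 15, 20):
    arrangements, distances = cycle_counts(n)
    print(f"{n:>2} vertices: {arrangements:,} arrangements, {distances:,} distances")
```

Each additional vertex multiplies the number of arrangements by the previous vertex count, which is why the brute force method is practical only for very small graphs.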

The Nearest Neighbor Method

When the brute force method is impractical for solving a traveling salesperson problem, an alternative is a greedy algorithm known as the nearest neighbor method, which always visits the closest or least costly place first. This method finds a Hamilton cycle of relatively low weight in a complete graph in which, at each phase, the next vertex is chosen by comparing the edges between the current vertex and the remaining vertices to find the lowest weight. Since the nearest neighbor method is a greedy algorithm, it usually doesn’t give the best solution, but it usually gives a solution that is "good enough." Most importantly, the number of steps will be the number of vertices. That’s right! A problem with 10 vertices requires 10 steps, not 362,880. Let’s look at an example to see how it works.

Suppose that a candidate for governor wants to hold rallies around the state. They plan to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is indicated in the graph in Figure 12.196.

Let’s help the candidate keep costs of travel down by applying the nearest neighbor method to find a Hamilton cycle that has a reasonably low weight. Begin by marking the starting vertex, A, as \(V_1\) for "visited 1st." Then compare the weights of the edges between A and the vertices adjacent to A: $250, $210, $300, $200, and $100, as shown in Figure 12.197. The lowest of these is $100, which is the edge between A and F.

Mark F as \(V_2\) for "visited 2nd." Then compare the weights of the edges between F and the remaining vertices adjacent to F: $170, $330, $150, and $350, as shown in Figure 12.198. The lowest of these is $150, which is the edge between F and D.

Mark D as \(V_3\) for "visited 3rd." Next, compare the weights of the edges between D and the remaining vertices adjacent to D: $120, $310, and $270, as shown in Figure 12.199. The lowest of these is $120, which is the edge between D and B.

So, mark B as \(V_4\) for "visited 4th." Finally, compare the weights of the edges between B and the remaining vertices adjacent to B: $160 and $220, as shown in Figure 12.200. The lower amount is $160, which is the edge between B and E.

Now you can mark E as \(V_5\) and mark the only remaining vertex, which is C, as \(V_6\). This is shown in Figure 12.201. Make a note of the weight of the edge from E to C, which is $180, and from C back to A, which is $210.

The Hamilton cycle we found is A → F → D → B → E → C → A. The weight of the cycle is \(\$100 + \$150 + \$120 + \$160 + \$180 + \$210 = \$920\). This may or may not be the route with the lowest cost, but there is a good chance it is very close, since most of its edges are among the lowest-weight edges on the graph, and we found it in six steps instead of finding 120 different Hamilton cycles and calculating 60 weights. Let’s summarize the procedure that we used.

Step 1: Select the starting vertex and label it \(V_1\) for "visited 1st." Identify the edge of lowest weight between \(V_1\) and the remaining vertices.

Step 2: Label the vertex at the end of the edge of lowest weight that you found in the previous step as \(V_n\), where the subscript \(n\) indicates the order in which the vertex is visited. Identify the edge of lowest weight between \(V_n\) and the vertices that remain to be visited.

Step 3: If vertices remain that have not been visited, repeat Step 2. Otherwise, a Hamilton cycle of low weight is \(V_1 \to V_2 \to \cdots \to V_n \to V_1\).
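The three steps above can be sketched in Python. This is a minimal illustration under stated assumptions: the function name is made up, ties are broken alphabetically (the steps above do not say how to break ties), and the data reuses the Air Force base weights from Example 12.44 only so that the sketch has a complete set of edge weights to run on.

```python
# Edge weights reused from Example 12.44 (an assumption made only to have concrete data).
WEIGHTS = {
    frozenset("TB"): 84, frozenset("TE"): 370, frozenset("TV"): 396,
    frozenset("BE"): 410, frozenset("BV"): 396, frozenset("EV"): 207,
}

def nearest_neighbor(start, vertices, weights):
    """Greedy tour: from the current vertex, always move to the closest unvisited vertex."""
    tour = [start]                                # Step 1: the start is V1
    unvisited = sorted(set(vertices) - {start})   # sorted so ties break alphabetically
    total = 0
    while unvisited:                              # Step 3: repeat while vertices remain
        current = tour[-1]
        # Step 2: pick the edge of lowest weight to a vertex not yet visited.
        nxt = min(unvisited, key=lambda v: weights[frozenset((current, v))])
        total += weights[frozenset((current, nxt))]
        tour.append(nxt)
        unvisited.remove(nxt)
    total += weights[frozenset((tour[-1], start))]  # return to the starting vertex
    tour.append(start)
    return tour, total

tour, total = nearest_neighbor("T", "TBEV", WEIGHTS)
print(" → ".join(tour), total)
```

Starting from T, the sketch visits B, then V, then E before returning to T. On this small graph that happens to be a cycle of least weight, but the greedy choice does not guarantee this in general.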

Example 12.45

Using the nearest neighbor method.

Suppose that the candidate for governor wants to hold rallies around the state but time before the election is very limited. They would like to leave their home in city A, visit cities B, C, D, E, and F each once, and return home. The airfare between cities is not as important as the time of travel, which is indicated in Figure 12.202. Use the nearest neighbor method to find a route with relatively low travel time. What is the total travel time of the route that you found?

Step 1: Label vertex A as \(V_1\). The edge of lowest weight between A and the remaining vertices is 85 min between A and D.

Step 2: Label vertex D as \(V_2\). The edge of lowest weight between D and the vertices that remain to be visited, B, C, E, and F, is 70 min between D and F.

Repeat Step 2: Label vertex F as \(V_3\). The edge of lowest weight between F and the vertices that remain to be visited, B, C, and E, is 75 min between F and C.

Repeat Step 2: Label vertex C as \(V_4\). The edge of lowest weight between C and the vertices that remain to be visited, B and E, is 100 min between C and B.

Repeat Step 2: Label vertex B as \(V_5\). The only vertex that remains to be visited is E. The weight of the edge between B and E is 95 min.

Step 3: A Hamilton cycle of low weight is A → D → F → C → B → E → A. So, a route of relatively low travel time is A to D to F to C to B to E and back to A. The total travel time of this route is \(85 + 70 + 75 + 100 + 95 + 90 = 515\) min, or 8 hrs 35 min.
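The total in Step 3 can be checked with a few lines of Python. This is only a sketch: the list `legs` simply records the edges chosen in the steps above, and the variable names are made up for the illustration.

```python
# Each leg of the route found above: (from, to, minutes).
legs = [
    ("A", "D", 85),
    ("D", "F", 70),
    ("F", "C", 75),
    ("C", "B", 100),
    ("B", "E", 95),
    ("E", "A", 90),
]

# Every leg should begin where the previous leg ended.
connected = all(prev[1] == nxt[0] for prev, nxt in zip(legs, legs[1:]))

total = sum(minutes for _, _, minutes in legs)
hours, minutes = divmod(total, 60)  # convert minutes to hours and leftover minutes
print(connected, total, hours, minutes)
```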


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Authors: Donna Kirk

- Publisher/website: OpenStax

- Book title: Contemporary Mathematics

- Publication date: Mar 22, 2023

- Location: Houston, Texas

- Book URL: https://openstax.org/books/contemporary-mathematics/pages/1-introduction

- Section URL: https://openstax.org/books/contemporary-mathematics/pages/12-9-traveling-salesperson-problem

© Dec 21, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.
